Anyway, after you have Java installed, you can grab SonarQube here: https://www.sonarqube.org/downloads/

Create a folder named sonarqube-7.6 on the C drive, then download SonarQube and extract it into C:\sonarqube-7.6\

Next we need SonarQube Scanner. You can download it here: https://docs.sonarqube.org/display/SCAN/Analyzing+with+SonarQube+Scanner

Create a folder named C:\sonar-scanner-cli-3.3.0.1492-windows and extract the SonarQube Scanner file there

Finally we need the sonar-tsql-plugin. You can download it here: https://github.com/gretard/sonar-tsql-plugin/releases

Download the file named sonar-tsqlopen-plugin-0.9.0.jar

Place the jar file in the folder C:\sonarqube-7.6\sonarqube-7.6\extensions\plugins\

Now it's time to create some environment variables. In an Explorer window, paste this into the address bar

Control Panel\System and Security\System

Click on Advanced system settings, click on Environment Variables, then click on New

In the variable name add SONAR_RUNNER_HOME

In the variable value add C:\sonar-scanner-cli-3.3.0.1492-windows

It will look like this

There is one more thing to do: we need to add something to the path

On older versions of Windows, add the line below at the end of the Path variable. On newer versions, just click on New and paste the line below

;%SONAR_RUNNER_HOME%\bin;
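If you want to double check the two settings before moving on, here is a minimal Python sketch. It assumes Python is installed, and the function name scanner_bin_on_path is made up for this post. It only looks at an environment mapping and reports whether the bin folder under SONAR_RUNNER_HOME shows up in the path, nothing more.

```python
import ntpath


def scanner_bin_on_path(env):
    """Return True if <SONAR_RUNNER_HOME>\\bin is one of the PATH entries in env."""
    home = env.get("SONAR_RUNNER_HOME")
    if not home:
        return False
    # ntpath applies Windows path rules (case-insensitive, backslashes)
    # even if this sketch is run on another operating system.
    expected = ntpath.normcase(ntpath.normpath(ntpath.join(home, "bin")))
    entries = [entry for entry in env.get("PATH", "").split(";") if entry]
    return any(
        ntpath.normcase(ntpath.normpath(entry)) == expected for entry in entries
    )
```

From a new command window you could call it as scanner_bin_on_path(dict(os.environ)) after an import os. Open a fresh window first, since windows that were already open do not pick up the new variables.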

OK, time to finally run (and fail) SonarQube

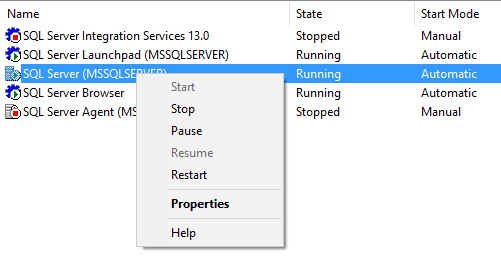

Go to the folder C:\sonarqube-7.6\sonarqube-7.6\bin\windows-x86-32 and kick off the script StartSonar.bat

If you get an error about 32 or 64 bit, then run the script from the windows-x86-64 folder

With luck the script runs without an error. If you do get one, is it this one?

jvm 1 | Error: missing `server' JVM at `C:\Program Files (x86)\Java\jre1.8.0_201\bin\server\jvm.dll'.

jvm 1 | Please install or use the JRE or JDK that contains these missing components.

C:\sonarqube-7.6\sonarqube-7.6\bin\windows-x86-32>StartSonar.bat

wrapper | ERROR: Another instance of the SonarQube application is already running.

Press any key to continue . . .

C:\sonarqube-7.6\sonarqube-7.6\bin\windows-x86-32>StartSonar.bat

wrapper | --> Wrapper Started as Console

wrapper | Launching a JVM...

jvm 1 | Wrapper (Version 3.2.3) http://wrapper.tanukisoftware.org

jvm 1 | Copyright 1999-2006 Tanuki Software, Inc. All Rights Reserved.

jvm 1 |

jvm 1 | 2019.02.12 13:22:02 INFO app[][o.s.a.AppFileSystem] Cleaning or creating temp directory C:\sonarqube-7.6\sonarqube-7.6\temp

jvm 1 | 2019.02.12 13:22:02 INFO app[][o.s.a.es.EsSettings] Elasticsearch listening on /127.0.0.1:9001

jvm 1 | 2019.02.12 13:22:02 INFO app[][o.s.a.p.ProcessLauncherImpl] Launch process[[key='es', ipcIndex=1, logFilenamePrefix=es]] from [C:\sonarqube-7.6\sonarqube-7.6\elasticsearch]: C:\Program Files (x86)\Java\jre1.8.0_201\bin\java -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -XX:+AlwaysPreTouch -server -Xss1m -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djna.nosys=true -Djdk.io.permissionsUseCanonicalPath=true -Dio.netty.noUnsafe=true -Dio.netty.noKeySetOptimization=true -Dio.netty.recycler.maxCapacityPerThread=0 -Dlog4j.shutdownHookEnabled=false -Dlog4j2.disable.jmx=true -Dlog4j.skipJansi=true -Xms512m -Xmx512m -XX:+HeapDumpOnOutOfMemoryError -Delasticsearch -Des.path.home=C:\sonarqube-7.6\sonarqube-7.6\elasticsearch -cp lib/* org.elasticsearch.bootstrap.Elasticsearch -Epath.conf=C:\sonarqube-7.6\sonarqube-7.6\temp\conf\es

jvm 1 | 2019.02.12 13:22:02 INFO app[][o.s.a.SchedulerImpl] Waiting for Elasticsearch to be up and running

jvm 1 | Error: missing `server' JVM at `C:\Program Files (x86)\Java\jre1.8.0_201\bin\server\jvm.dll'.

jvm 1 | Please install or use the JRE or JDK that contains these missing components.

To quickly fix this, create a server folder in the Java bin location from the error message

Now grab the files from the client folder and copy them to the server folder, then run StartSonar.bat again. This time the output should end with these lines

jvm 1 | 2019.02.12 13:25:53 INFO app[][o.s.a.SchedulerImpl] Process[ce] is up

jvm 1 | 2019.02.12 13:25:53 INFO app[][o.s.a.SchedulerImpl] SonarQube is up

Navigate to http://localhost:9000/ and log in with admin as both the username and the password
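If you would rather check from a script than from a browser, below is a small Python sketch. It assumes the server answers on http://localhost:9000 and that the api/system/status web service is reachable without logging in. The function names is_up and server_is_up are made up for this post.

```python
import json
import urllib.request


def is_up(payload):
    """Return True when a status JSON body reports the status UP."""
    return json.loads(payload).get("status") == "UP"


def server_is_up(base_url="http://localhost:9000", timeout=5):
    """Fetch /api/system/status; any connection or parse problem counts as not up."""
    try:
        with urllib.request.urlopen(
            base_url + "/api/system/status", timeout=timeout
        ) as response:
            return is_up(response.read().decode("utf-8"))
    except (OSError, ValueError):
        # URLError is a subclass of OSError, JSONDecodeError of ValueError.
        return False
```

Calling server_is_up() while the server is still starting should simply return False, so it can be called in a loop until it returns True.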

Now we need to do one more thing and we are ready. Open Notepad or your favorite text editor and paste the following

# Required metadata
sonar.projectKey=StaticCodeAnalysis.project
sonar.projectName=Static Code Analysis project
sonar.projectVersion=1.0
sonar.sources=StoredProcedures,UserDefinedFunctions
sonar.host.url=http://localhost:9000
#sonar.exclusions=**/bin/**/*.*,**/obj/**/*.*,**/*.sqlproj

# Comma-separated paths to directories of source codes to be analyzed.
# Path is relative to the sonar-project.properties file.
# Replace "\" by "/" on Windows.
# Since SonarQube 4.2, this property is optional.
# If not set, SonarQube starts looking for source code
# from the directory containing the sonar-project.properties file.

# Language
sonar.language=tsql

#Encoding of the source code
#sonar.sourceEncoding=UTF-8

Save that as sonar-project.properties in the folder where your code is located. In our case that is C:\temp

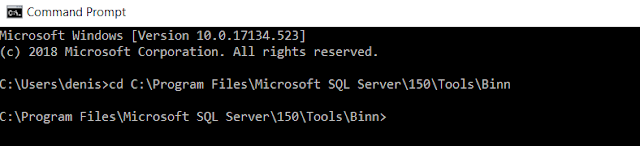
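If you have more than one code folder to scan, typing this file by hand gets old quickly. Here is a rough Python sketch that renders the same required properties from a dictionary. The helper names render_properties and write_properties are made up for this post, and the sketch assumes plain ASCII values without backslashes, so no escaping is done.

```python
from pathlib import Path


def render_properties(settings):
    """Render a dict as key=value lines, one property per line."""
    return "".join(f"{key}={value}\n" for key, value in settings.items())


def write_properties(folder, settings):
    """Write sonar-project.properties into folder and return its path."""
    target = Path(folder) / "sonar-project.properties"
    target.write_text(render_properties(settings), encoding="utf-8")
    return target


# Same values as the properties file in this post.
settings = {
    "sonar.projectKey": "StaticCodeAnalysis.project",
    "sonar.projectName": "Static Code Analysis project",
    "sonar.projectVersion": "1.0",
    "sonar.sources": "StoredProcedures,UserDefinedFunctions",
    "sonar.host.url": "http://localhost:9000",
    "sonar.language": "tsql",
}
```

Something like write_properties(r"C:\temp", settings) would then drop the file next to the code.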

Open a command window, cd to the C:\temp folder, and paste the following

C:\sonar-scanner-cli-3.3.0.1492-windows\sonar-scanner-3.3.0.1492-windows\bin\sonar-scanner.bat

You should see something like the following

C:\temp>C:\sonar-scanner-cli-3.3.0.1492-windows\sonar-scanner-3.3.0.1492-windows\bin\sonar-scanner.bat

INFO: Scanner configuration file: C:\sonar-scanner-cli-3.3.0.1492-windows\sonar-scanner-3.3.0.1492-windows\bin\..\conf\sonar-scanner.properties

INFO: Project root configuration file: C:\temp\sonar-project.properties

INFO: SonarQube Scanner 3.3.0.1492

INFO: Java 1.8.0_121 Oracle Corporation (64-bit)

INFO: Windows 10 10.0 amd64

INFO: User cache: C:\Users\denis\.sonar\cache

INFO: SonarQube server 7.6.0

INFO: Default locale: "en_US", source code encoding: "windows-1252" (analysis is platform dependent)

INFO: Load global settings

INFO: Load global settings (done) | time=78ms

INFO: Server id: BF41A1F2-AWji9AZ8kkLV5J16bA1i

INFO: User cache: C:\Users\denis\.sonar\cache

INFO: Load/download plugins

INFO: Load plugins index

INFO: Load plugins index (done) | time=31ms

INFO: Load/download plugins (done) | time=47ms

INFO: Process project properties

INFO: Execute project builders

INFO: Execute project builders (done) | time=0ms

INFO: Project key: StaticCodeAnalysis.project

INFO: Base dir: C:\temp

INFO: Working dir: C:\temp\.scannerwork

INFO: Load project settings

INFO: Load project settings (done) | time=16ms

INFO: Load project repositories

INFO: Load project repositories (done) | time=47ms

INFO: Load quality profiles

INFO: Load quality profiles (done) | time=63ms

INFO: Load active rules

INFO: Load active rules (done) | time=1922ms

INFO: Load metrics repository

INFO: Load metrics repository (done) | time=32ms

WARN: SCM provider autodetection failed. Please use "sonar.scm.provider" to define SCM of your project, or disable the SCM Sensor in the project settings.

INFO: Language is forced to tsql

INFO: Indexing files...

INFO: Project configuration:

INFO: 23 files indexed

INFO: Quality profile for tsql: Sonar Way

INFO: ------------- Run sensors on module Static Code Analysis project

INFO: Sensor JaCoCo XML Report Importer [jacoco]

INFO: Sensor JaCoCo XML Report Importer [jacoco] (done) | time=0ms

INFO: Sensor MsIssuesLoaderSensor [tsqlopen]

INFO: Found 0 issues

INFO: Sensor MsIssuesLoaderSensor [tsqlopen] (done) | time=15ms

INFO: Sensor CodeGuardIssuesLoaderSensor [tsqlopen]

INFO: SQL Code guard path is empty, trying to search directories instead

INFO: Found 0 issues

INFO: Sensor CodeGuardIssuesLoaderSensor [tsqlopen] (done) | time=0ms

INFO: Sensor CustomChecksSensor [tsqlopen]

WARN: Property 'sonar.tsql.customrules.paths' is not declared as multi-values/property set but was read using 'getStringArray' method. The SonarQube plugin declaring this property should be updated.

INFO: Total 1 custom rules repositories with total 15 checks

INFO: Sensor CustomChecksSensor [tsqlopen] (done) | time=21548ms

INFO: Sensor CoverageSensor [tsqlopen]

INFO: Sensor CoverageSensor [tsqlopen] (done) | time=16ms

INFO: Sensor JavaXmlSensor [java]

INFO: Sensor JavaXmlSensor [java] (done) | time=0ms

INFO: Sensor HTML [web]

INFO: Sensor HTML [web] (done) | time=15ms

INFO: Sensor Zero Coverage Sensor

INFO: Sensor Zero Coverage Sensor (done) | time=16ms

INFO: ------------- Run sensors on project

INFO: No SCM system was detected. You can use the 'sonar.scm.provider' property to explicitly specify it.

INFO: 21 files had no CPD blocks

INFO: Calculating CPD for 2 files

INFO: CPD calculation finished

INFO: Analysis report generated in 250ms, dir size=127 KB

INFO: Analysis report compressed in 51ms, zip size=40 KB

INFO: Analysis report uploaded in 47ms

INFO: ANALYSIS SUCCESSFUL, you can browse http://localhost:9000/dashboard?id=StaticCodeAnalysis.project

INFO: Note that you will be able to access the updated dashboard once the server has processed the submitted analysis report

INFO: More about the report processing at http://localhost:9000/api/ce/task?id=AWjjFKvAkkLV5J16bDDx

INFO: Analysis total time: 26.228 s

INFO: ------------------------------------------------------------------------

INFO: EXECUTION SUCCESS

INFO: ------------------------------------------------------------------------

INFO: Total time: 28.017s

INFO: Final Memory: 36M/1173M

INFO: ------------------------------------------------------------------------

C:\temp>

When you get the prompt back, it's time to go to the http://localhost:9000/projects URL

You should have 1 project there. The name matches what we had in our properties file

sonar.projectName=Static Code Analysis project

When you click on the project, you will get some information about bugs, code smells and duplication

Clicking on code smells brings back the following.. you can then act on those or not

I added 2 of my own bad procs to see what it would flag

create proc BadProc as select * from Person.Address order by 2,3

I also added this one, Unique_Login_IPs. You can grab it here: https://social.msdn.microsoft.com/Forums/en-US/55cfe1b0-402a-4468-bf7a-cc0966d4a487/faster-way-to-do-this

As you can see we got some warnings for those procs

SELECT *.. No need to comment on this one

No ASC/DESC in the order by... this defaults to ASC anyway but I guess for clarity it's better to specify ASC

Positional reference is used... I do this all the time with ad-hoc queries but I don't do it with procs

Non-sargeable argument found - column referenced in a function.

That is this line

WHERE (method = 'LOGIN') AND (YEAR(logged) = @year1) AND (MONTH(logged) = 3)) as tmpy1_3

Ideally you would rewrite this by first creating a variable @startdate that holds the first day of March for the given year, in other words '20190301'

Then the WHERE clause would be something like this

WHERE (method = 'LOGIN') AND logged >= @startdate and logged < dateadd(mm,1,@startdate)
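To convince yourself that the range version returns the same rows, here is a small sketch using Python's built-in sqlite3 module as a stand-in for SQL Server. The logins table and its rows are made up for this post, strftime plays the role of YEAR() and MONTH(), and datetime(..., '+1 month') plays the role of dateadd(mm,1,@startdate). It only shows that the two predicates are equivalent. It says nothing about SQL Server query plans.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE logins (method TEXT, logged TEXT)")
conn.executemany(
    "INSERT INTO logins VALUES (?, ?)",
    [
        ("LOGIN", "2019-02-28 23:59:59"),
        ("LOGIN", "2019-03-01 00:00:00"),
        ("LOGIN", "2019-03-31 23:59:59"),
        ("LOGIN", "2019-04-01 00:00:00"),
        ("LOGOUT", "2019-03-15 12:00:00"),
    ],
)

# Column wrapped in a function: it has to be evaluated for every row.
by_function = conn.execute(
    "SELECT logged FROM logins WHERE method = 'LOGIN' "
    "AND strftime('%Y', logged) = ? AND strftime('%m', logged) = '03' "
    "ORDER BY logged",
    ("2019",),
).fetchall()

# Bare column compared against a half-open range: [start, start + 1 month).
start = "2019-03-01 00:00:00"
by_range = conn.execute(
    "SELECT logged FROM logins WHERE method = 'LOGIN' "
    "AND logged >= ? AND logged < datetime(?, '+1 month') "
    "ORDER BY logged",
    (start, start),
).fetchall()

conn.close()
```

Both by_function and by_range end up with the two March rows. The range is closed at the start and open at the end, which is why the midnight row on April 1st stays out.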

That is all for this post. Of course you can do some of this stuff with other tools and even with policy management. But if you use SQL Server alongside many other languages, you could do static code analysis for all of them from one tool