With the CTP2 build of SQL Server 2017, you now have the ability to use SQL Graph

I decided to play around with it a little, I based the code here on the sample I found in Books On Line. To follow along, first create this database, you have to be on SQL Server 2017 CTP2 or higher in order for the code to work

CREATE DATABASE GraphDBTest; GO USE GraphDBTest; GO

In SQL Graph, you have node tables and edge table, here is the description for both tables from Books On Line

A node table represents an entity in a graph schema. Every time a node table is created, along with the user defined columns, an implicit $node_id column is created, which uniquely identifies a given node in the database. The values in $node_id are automatically generated and are a combination of object_id of that node table and an internally generated bigint value

It is recommended that users create a unique constraint or index on the $node_id column at the time of creation of node table, but if one is not created, a default unique, non-clustered index is automatically created.

An edge table represents a relationship in a graph. Edges are always directed and connect two nodes. An edge table enables users to model many-to-many relationships in the graph. An edge table may or may not have any user defined attributes in it. Every time an edge table is created, along with the user defined attributes, three implicit columns are created in the edge table:

| Column name | Description |

|---|---|

$edge_id | Uniquely identifies a given edge in the database. It is a generated column and the value is a combination of object_id of the edge table and a internally generated bigint value. However, when the $edge_id column is selected, a computed value in the form of a JSON string is displayed. $edge_id is a pseudo-column, that maps to an internal name with hex string in it. When you select $edge_id from the table, the column name will appear as $edge_id_\<hex_string>. Using pseudo-column names in quereis is the recommended way of querying the internal $edge_id column and using internal name with hex string should be avoided. |

$from_id | Stores the $node_id of the node, from where the edge originates. |

$to_id | Stores the $node_id of the node, at which the edge terminates. |

Similar to the $node_id column, it is recommended that users create a unique index or constraint on the $edge_id column at the time of creation of the edge table, but if one is not created, a default unique, non-clustered index is automatically created on this column. It is also recommended, for OLTP scenarios, that users create an index on ($from_id, $to_id) columns, for faster lookups in the direction of the edge.

Here is what a node table looks like, the only difference is the AS NODE at the end

CREATE TABLE Person ( ID INT PRIMARY KEY, name VARCHAR(100) ) AS NODE;

Inserting into a node table is same as inserting into a regular table

INSERT INTO Person VALUES (1,'Denis'); INSERT INTO Person VALUES (2,'Shidhar'); INSERT INTO Person VALUES (3,'Geoff'); INSERT INTO Person VALUES (4,'Kevin'); INSERT INTO Person VALUES (5,'Liz'); INSERT INTO Person VALUES (6,'Melanie');

Let's see what the output looks like

SELECT * FROM Person

| $node_id_A0703BD81C174451BEC7688009010A5B | ID | name |

| {"type":"node","schema":"dbo","table":"Person","id":0} | 1 | Denis |

| {"type":"node","schema":"dbo","table":"Person","id":1} | 2 | Shidhar |

| {"type":"node","schema":"dbo","table":"Person","id":2} | 3 | Geoff |

| {"type":"node","schema":"dbo","table":"Person","id":3} | 4 | Kevin |

| {"type":"node","schema":"dbo","table":"Person","id":4} | 5 | Liz |

| {"type":"node","schema":"dbo","table":"Person","id":5} | 6 | Melanie |

As you can see, there is JSON in the $node_id column

The sys.tables view has 2 new columns, is_edge and is_node. If we query the sys.tables view now for the Person table we just created, you will see that is_node = 1

SELECT t.is_edge,t.is_node,* FROM sys.tables t WHERE name = 'Person'

Here is the result

| is_edge | is_node | name | object_id | schema_id | type_desc | create_date |

| 0 | 1 | Person | 901578250 | 1 | USER_TABLE | 2017-05-09 08:55:30.513 |

Now we will add another table, this table will have some data about DB products

CREATE TABLE DBProduct ( ID INT NOT NULL, name VARCHAR(100) ) AS NODE; INSERT INTO DBProduct VALUES (1,'SQL Server'); INSERT INTO DBProduct VALUES (2,'Cassandra'); INSERT INTO DBProduct VALUES (3,'Oracle'); INSERT INTO DBProduct VALUES (4,'FoundationDB'); INSERT INTO DBProduct VALUES (5,'MongoDB'); INSERT INTO DBProduct VALUES (6,'Vertica'); INSERT INTO DBProduct VALUES (7,'Volt');

And now it is time for our edge tables, we will have 2 of them, a likes table and a friendOf table

CREATE TABLE likes (rating INT) AS EDGE; CREATE TABLE friendOf AS EDGE;

To insert into the likes table, we have to say which person likes what product. The person will go into the $from_id column, the DBProduct will got into the $to_id column. You $Node_id to grab the id to populate this table

The node_id for Person with an id of 1 will go into the $from_id column, the node_id for DBProduct with an id of 1 will go into the $to_id column. That looks like this

((SELECT $node_id FROM Person WHERE id = 1), (SELECT $node_id FROM DBProduct WHERE id = 1),5);Here are the insert statements to populate this table

INSERT INTO likes VALUES ((SELECT $node_id FROM Person WHERE id = 1), (SELECT $node_id FROM DBProduct WHERE id = 1),5); INSERT INTO likes VALUES ((SELECT $node_id FROM Person WHERE id = 1), (SELECT $node_id FROM DBProduct WHERE id = 2),15); INSERT INTO likes VALUES ((SELECT $node_id FROM Person WHERE id = 2), (SELECT $node_id FROM DBProduct WHERE id = 1),6); INSERT INTO likes VALUES ((SELECT $node_id FROM Person WHERE id = 3), (SELECT $node_id FROM DBProduct WHERE id = 3),7); INSERT INTO likes VALUES ((SELECT $node_id FROM Person WHERE id = 4), (SELECT $node_id FROM DBProduct WHERE id = 4),8); INSERT INTO likes VALUES ((SELECT $node_id FROM Person WHERE id = 5), (SELECT $node_id FROM DBProduct WHERE id = 5),9);

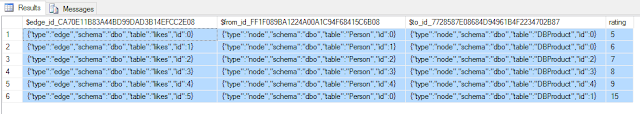

Let's look at what it looks like in the likes edge table

SELECT * FROM likes

Here is what it looks like, as you can see it is a bunch of JSON.. (click on the image for a bigger version)

Now we will also insert some data into the friendOf table, it is the same as with the likes table, lookup the node ids and insert the data

INSERT INTO friendof VALUES ((SELECT $NODE_ID FROM person WHERE ID = 1), (SELECT $NODE_ID FROM person WHERE ID = 2)); INSERT INTO friendof VALUES ((SELECT $NODE_ID FROM person WHERE ID = 1), (SELECT $NODE_ID FROM person WHERE ID = 5)); INSERT INTO friendof VALUES ((SELECT $NODE_ID FROM person WHERE ID = 2), (SELECT $NODE_ID FROM person WHERE ID = 3)); INSERT INTO friendof VALUES ((SELECT $NODE_ID FROM person WHERE ID = 3), (SELECT $NODE_ID FROM person WHERE ID = 1)); INSERT INTO friendof VALUES ((SELECT $NODE_ID FROM person WHERE ID = 4), (SELECT $NODE_ID FROM person WHERE ID = 2)); INSERT INTO friendof VALUES ((SELECT $NODE_ID FROM person WHERE ID = 5), (SELECT $NODE_ID FROM person WHERE ID = 4));

Now it is time to run some queries

This query will return all the products that Denis likes

-- Find DBProducts that Denis likes SELECT DBProduct.name FROM Person, likes, DBProduct WHERE MATCH (Person-(likes)->DBProduct) AND Person.name = 'Denis';

name

-------------

SQL Server

Cassandra

Not surprisingly, SQL Server is in that list

What about my friends? What DB products do they like?

-- Find DBProducts that Denis' friends like SELECT DBProduct.name as DBName,person2.name FROM Person person1, Person person2, likes, friendOf, DBProduct WHERE MATCH(person1-(friendOf)->person2-(likes)->DBProduct) AND person1.name='Denis';

DBName name

--------------- ------------

SQL Server Shidhar

MongoDB Liz

Okay, SQL Server and MongoDB, I can live with that

What about Liz, what does her friend Kevin like?

SELECT DBProduct.name as DBName,person2.name FROM Person person1, Person person2, likes, friendOf, DBProduct WHERE MATCH(person1-(friendOf)->person2-(likes)->DBProduct) AND person1.name='Liz';

DBName name

------ -------

FoundationDB Kevin

FoundationDB? Didn't Apple buy that and then shut down the downloads?

So what product is there that both friends like?

To do that we specify that person1 is a friendOf person 2

and both person1 and person2 like the same product. Since you cannot use the same table twice, you need to use an alias, in this case I have used l2 as an alias for the likes table

SELECT DBProduct.name as DBName,person1.name,person2.name FROM Person person1, Person person2, likes, friendOf, DBProduct, likes as l2 WHERE MATCH(person1-(friendOf)->person2-(likes)->DBProduct AND person1-(l2)->DBProduct)

DBName name name

-----------

SQL Server Denis Shidhar

What a surprise, can you believe it, it is SQL Server!!

Let's take a look at some meta data, this time, we are adding some columns from the sys.columns view, I have added graph_type_desc and is_edge

select t.name, c.name, c.graph_type_desc,t.is_edge,t.is_node from sys.columns c join sys.tables t on c.object_id = t.object_id where graph_type is not null order by t.name, c.name

Here is the output from that query

| name | name | graph_type_desc | is_edge | is_node |

| DBProduct | $node_id_4C8D1144EF7745FD8BACDB7178353A65 | GRAPH_ID_COMPUTED | 0 | 1 |

| DBProduct | graph_id_8FA26FC01AE94CF9921364ECEFB71621 | GRAPH_ID | 0 | 1 |

| friendOf | $edge_id_FBBA05CB4E0F49E7919EC346DBB21F92 | GRAPH_ID_COMPUTED | 1 | 0 |

| friendOf | $from_id_143FE9B1B3E942EB99FF8396B292CD19 | GRAPH_FROM_ID_COMPUTED | 1 | 0 |

| friendOf | $to_id_DFF0DCFA07FE45E3A31E1925F5E6F886 | GRAPH_TO_ID_COMPUTED | 1 | 0 |

| friendOf | from_id_71FB1C6C6D82428889276A07A9AF32E4 | GRAPH_FROM_ID | 1 | 0 |

| friendOf | from_obj_id_4310B8C357D641EC990E91329687BDAA | GRAPH_FROM_OBJ_ID | 1 | 0 |

| friendOf | graph_id_3722B217CA404686BE44855E883AA6FA | GRAPH_ID | 1 | 0 |

| friendOf | to_id_D01976015F664F91A0A29819D79EE6A5 | GRAPH_TO_ID | 1 | 0 |

| friendOf | to_obj_id_8C26534B00DF47FBA87FBED6D14C3201 | GRAPH_TO_OBJ_ID | 1 | 0 |

| likes | $edge_id_CA70E11B83A44BD99DAD3B14EFCC2E08 | GRAPH_ID_COMPUTED | 1 | 0 |

| likes | $from_id_FF1F089BA1224A00A1C94F68415C6B08 | GRAPH_FROM_ID_COMPUTED | 1 | 0 |

| likes | $to_id_7728587E08684D94961B4F2234702B87 | GRAPH_TO_ID_COMPUTED | 1 | 0 |

| likes | from_id_ACD968F7815C4A3A9B5B6C5506235EDE | GRAPH_FROM_ID | 1 | 0 |

| likes | from_obj_id_185C6B5EB64F4C0A8E3E1CF65092F706 | GRAPH_FROM_OBJ_ID | 1 | 0 |

| likes | graph_id_16575BE2C0764232B5B440524166DF15 | GRAPH_ID | 1 | 0 |

| likes | to_id_D164826C35374D3E98EDBC3FA77457B3 | GRAPH_TO_ID | 1 | 0 |

| likes | to_obj_id_373354D4D7014EC89771C48F961561BB | GRAPH_TO_OBJ_ID | 1 | 0 |

| Person | $node_id_A0703BD81C174451BEC7688009010A5B | GRAPH_ID_COMPUTED | 0 | 1 |

| Person | graph_id_40FFD8418C1343639EA62CA66B6CE414 | GRAPH_ID | 0 | 1 |

You might have noticed that SQL Graph queries use old style joins

So instead of this

SELECT DBProduct.name as DBName,person1.name,person2.name FROM Person person1, Person person2, likes, friendOf, DBProduct, likes as l2

I can do this right?

SELECT DBProduct.name as DBName,person1.name,person2.name FROM Person person1 CROSS JOIN Person person2 CROSS JOIN likes CROSS JOIN friendOf CROSS JOIN DBProduct CROSS JOIN likes as l2

Yes that works, both queries return 54432 rows

Will it work with the WHERE clause?

SELECT DBProduct.name as DBName,person1.name,person2.name FROM Person person1 CROSS JOIN Person person2 CROSS JOIN likes CROSS JOIN friendOf CROSS JOIN DBProduct CROSS JOIN likes as l2 WHERE MATCH(person1-(friendOf)->person2-(likes)->DBProduct AND person1-(l2)->DBProduct)

Msg 13920, Level 16, State 1, Line 164

Identifier 'person1' in a MATCH clause is used with a JOIN clause or APPLY operator. JOIN and APPLY are not supported with MATCH clauses.

Msg 13920, Level 16, State 1, Line 164

Identifier 'friendOf' in a MATCH clause is used with a JOIN clause or APPLY operator. JOIN and APPLY are not supported with MATCH clauses.

Msg 13920, Level 16, State 1, Line 164

Identifier 'person2' in a MATCH clause is used with a JOIN clause or APPLY operator. JOIN and APPLY are not supported with MATCH clauses.

Msg 13920, Level 16, State 1, Line 164

Identifier 'person2' in a MATCH clause is used with a JOIN clause or APPLY operator. JOIN and APPLY are not supported with MATCH clauses.

Msg 13920, Level 16, State 1, Line 164

Identifier 'likes' in a MATCH clause is used with a JOIN clause or APPLY operator. JOIN and APPLY are not supported with MATCH clauses.

Msg 13920, Level 16, State 1, Line 164

Identifier 'DBProduct' in a MATCH clause is used with a JOIN clause or APPLY operator. JOIN and APPLY are not supported with MATCH clauses.

Msg 13920, Level 16, State 1, Line 165

Identifier 'person1' in a MATCH clause is used with a JOIN clause or APPLY operator. JOIN and APPLY are not supported with MATCH clauses.

Msg 13920, Level 16, State 1, Line 165

Identifier 'l2' in a MATCH clause is used with a JOIN clause or APPLY operator. JOIN and APPLY are not supported with MATCH clauses.

Msg 13920, Level 16, State 1, Line 165

Identifier 'DBProduct' in a MATCH clause is used with a JOIN clause or APPLY operator. JOIN and APPLY are not supported with MATCH clauses.

Boooo ... that is a big fat nope

Also keep the following in mind

There are certain limitations on node and edge tables in this release:

- Local or global temporary tables cannot be node or edge tables.

- Table types and table variables cannot be declared as a node or edge table.

- Node and edge tables cannot be created as system-versioned temporal tables.

- Node and edge tables cannot be memory optimized tables.

- Users cannot update the $from_id and $to_id columns of an edge using UPDATE statement. To update the nodes that an edge connects, users will have to insert the new edge pointing to new nodes and delete the previous one.

This was just a first look at Graph SQL, I will have to play around with it a little more to get more familiar with it and see if there are any use cases for me

In the meantime, visit Books On Line and start playing around with SQL Graph: https://docs.microsoft.com/en-us/sql/relational-databases/graphs/sql-graph-overview