In my

The best thing about the PASS summit for me is... post I said that I would do the following

I will also do a series about new stuff in SQL Server 2016, but that will be in between all the other posts. Whenever someone asked during a session how many people were already on SQL Server 2016, very few hands went up, this is the reason, I want to blog about this as well.

For all these series of posts, I plan to have a repo on github with a yet to be determined name. Then I will have all the SQL code for all the series organized there

This is the first post in that series

You can find the github repo for the series here

whatisnewinsqlserver2016, all the code for this post can be found here

TruncatePartitions

New in SQL Server is the ability to truncate a partition or more than 1 partition, this is very nice because we all know that truncate is much faster than a delete. Let's see how this all works

First create this simple table

CREATE TABLE SalesPartitioned(

YearCol SMALLINT NOT NULL,

OrderID INT NOT NULL,

SomeData UNIQUEIDENTIFIER DEFAULT newsequentialid())

GO

Now it is time to insert some data, we are going to create data for 6 years, we will use those years then to partition the data on. This query will insert 6 times 2048 rows, 2048 rows per year

INSERT SalesPartitioned (YearCol,OrderID)

SELECT 2013,number

FROM master..spt_values

WHERE type = 'P'

UNION ALL

SELECT 2014,number + 2048

FROM master..spt_values

WHERE type = 'P'

UNION ALL

SELECT 2015,number + 4096

FROM master..spt_values

WHERE type = 'P'

UNION ALL

SELECT 2016,number + 6144

FROM master..spt_values

WHERE type = 'P'

UNION ALL

SELECT 2017,number + 8192

FROM master..spt_values

WHERE type = 'P'

UNION ALL

SELECT 2018,number + 10240

FROM master..spt_values

WHERE type = 'P'

Now let's create the partition function

CREATE PARTITION FUNCTION pfFiscalYear(SMALLINT)

AS RANGE LEFT FOR VALUES(2013,2014,2015,2016,2017)

GO

Create the partition scheme

CREATE PARTITION SCHEME psFiscalYear

AS PARTITION pfFiscalYear ALL TO ([PRIMARY])

GO

Add a primary key to the table, add it to the partition scheme we created above

ALTER TABLE dbo.SalesPartitioned ADD CONSTRAINT

PK_Sales PRIMARY KEY CLUSTERED (YearCol,OrderID)

ON psFiscalYear(YearCol)

GO

Now let's see what we have as far as counts for each partition

SELECT partition_number,rows

FROM sys.partitions

WHERE object_id = OBJECT_ID('SalesPartitioned')

Here are the results

partition_number

rows

1

2048

2

2048

3

2048

4

2048

5

2048

6

2048

As you can see we have 6 partitions, each partition has 2048 rows

We can verify this by running a count ourselves

SELECT YearCol, $PARTITION.pfFiscalYear(YearCol) AS Partition,

COUNT(*) AS [COUNT] FROM SalesPartitioned

GROUP BY $PARTITION.pfFiscalYear(YearCol),YearCol

ORDER BY Partition;

GO

YearCol

Partition

COUNT

2013

1

2048

2014

2

2048

2015

3

2048

2016

4

2048

2017

5

2048

2018

6

2048

Now it is time to truncate a partition.

In order to truncate a partition, you use the following syntax

TRUNCATE TABLE ........

WITH ( PARTITIONS ( { <partition_number_expression> | <range> } [ , ...n ] ) )

So if we just want to truncate partition 2, we would execute the following

TRUNCATE TABLE SalesPartitioned

WITH (PARTITIONS (2));

GO

Checking those same count queries from before shows that partition 2 has no rows after we executed the truncate command

SELECT partition_number,rows

FROM sys.partitions

WHERE object_id = OBJECT_ID('SalesPartitioned')

partition_number

rows

1

2048

2

0

3

2048

4

2048

5

2048

6

2048

SELECT YearCol, $PARTITION.pfFiscalYear(YearCol) AS Partition,

COUNT(*) AS [COUNT] FROM SalesPartitioned

GROUP BY $PARTITION.pfFiscalYear(YearCol),YearCol

ORDER BY Partition;

GO

YearCol

Partition

COUNT

2013

1

2048

2015

3

2048

2016

4

2048

2017

5

2048

2018

6

2048

There are two ways you can truncate a bunch of partitions, for example if you want to remove 4,5 and 6, you can use WITH (PARTITIONS (4, 5, 6)) or you can use a range like shown in the code below

TRUNCATE TABLE SalesPartitioned

WITH (PARTITIONS (4 TO 6));

GO

After we execute that and we check the counts again, we see that we are now only left with partitions 1 and 3

SELECT partition_number,rows

FROM sys.partitions

WHERE object_id = OBJECT_ID('SalesPartitioned')

Here are the results from that query

partition_number

rows

1

2048

2

0

3

2048

4

0

5

0

6

0

SELECT YearCol, $PARTITION.pfFiscalYear(YearCol) AS Partition,

COUNT(*) AS [COUNT] FROM SalesPartitioned

GROUP BY $PARTITION.pfFiscalYear(YearCol),YearCol

ORDER BY Partition;

GO

Here are the results

YearCol

Partition

COUNT

2013

1

2048

2015

3

2048

What happens if you specify an invalid partition in your truncate statement?

TRUNCATE TABLE SalesPartitioned

WITH (PARTITIONS (12));

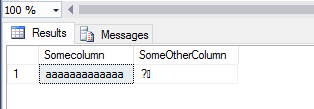

You get the following message

Msg 7722, Level 16, State 2, Line 1

Invalid partition number 12 specified for table 'SalesPartitioned', partition number can range from 1 to 6.

There you have it, this is how truncate partitions work

You can find the github repo for the series here

whatisnewinsqlserver2016, all the code for this post can be found here

TruncatePartitions