Aha... I have plenty of war stories with Excel so I said, it's probably some non printable character that is in the column.. either a tab (char(9)) or a non breaking space (char(160))..especially if the value was copied from the internet

He said isnumeric was returning 0 for rows that looked valid, I then told him to run this query on those rows

SELECT ASCII(LEFT(SomeColumn,1)), ASCII(RIGHT(SomeColumn,1)),* FROM StagingData s

That would give them the ascii numerical value. For example a tab is 9, linefeed = 10....

Here is a chart for the characters between 0 and 32

| Binary | Oct | Dec | Hex | Abbreviation | [b] | [c] | [d] | Name (1967) | ||

|---|---|---|---|---|---|---|---|---|---|---|

| 1963 | 1965 | 1967 | ||||||||

| 000 0000 | 000 | 0 | 00 | NULL | NUL | ␀ | ^@ | \0 | Null | |

| 000 0001 | 001 | 1 | 01 | SOM | SOH | ␁ | ^A | Start of Heading | ||

| 000 0010 | 002 | 2 | 02 | EOA | STX | ␂ | ^B | Start of Text | ||

| 000 0011 | 003 | 3 | 03 | EOM | ETX | ␃ | ^C | End of Text | ||

| 000 0100 | 004 | 4 | 04 | EOT | ␄ | ^D | End of Transmission | |||

| 000 0101 | 005 | 5 | 05 | WRU | ENQ | ␅ | ^E | Enquiry | ||

| 000 0110 | 006 | 6 | 06 | RU | ACK | ␆ | ^F | Acknowledgement | ||

| 000 0111 | 007 | 7 | 07 | BELL | BEL | ␇ | ^G | \a | Bell | |

| 000 1000 | 010 | 8 | 08 | FE0 | BS | ␈ | ^H | \b | Backspace[e][f] | |

| 000 1001 | 011 | 9 | 09 | HT/SK | HT | ␉ | ^I | \t | Horizontal Tab[g] | |

| 000 1010 | 012 | 10 | 0A | LF | ␊ | ^J | \n | Line Feed | ||

| 000 1011 | 013 | 11 | 0B | VTAB | VT | ␋ | ^K | \v | Vertical Tab | |

| 000 1100 | 014 | 12 | 0C | FF | ␌ | ^L | \f | Form Feed | ||

| 000 1101 | 015 | 13 | 0D | CR | ␍ | ^M | \r | Carriage Return[h] | ||

| 000 1110 | 016 | 14 | 0E | SO | ␎ | ^N | Shift Out | |||

| 000 1111 | 017 | 15 | 0F | SI | ␏ | ^O | Shift In | |||

| 001 0000 | 020 | 16 | 10 | DC0 | DLE | ␐ | ^P | Data Link Escape | ||

| 001 0001 | 021 | 17 | 11 | DC1 | ␑ | ^Q | Device Control 1 (often XON) | |||

| 001 0010 | 022 | 18 | 12 | DC2 | ␒ | ^R | Device Control 2 | |||

| 001 0011 | 023 | 19 | 13 | DC3 | ␓ | ^S | Device Control 3 (often XOFF) | |||

| 001 0100 | 024 | 20 | 14 | DC4 | ␔ | ^T | Device Control 4 | |||

| 001 0101 | 025 | 21 | 15 | ERR | NAK | ␕ | ^U | Negative Acknowledgement | ||

| 001 0110 | 026 | 22 | 16 | SYNC | SYN | ␖ | ^V | Synchronous Idle | ||

| 001 0111 | 027 | 23 | 17 | LEM | ETB | ␗ | ^W | End of Transmission Block | ||

| 001 1000 | 030 | 24 | 18 | S0 | CAN | ␘ | ^X | Cancel | ||

| 001 1001 | 031 | 25 | 19 | S1 | EM | ␙ | ^Y | End of Medium | ||

| 001 1010 | 032 | 26 | 1A | S2 | SS | SUB | ␚ | ^Z | Substitute | |

| 001 1011 | 033 | 27 | 1B | S3 | ESC | ␛ | ^[ | \e[i] | Escape[j] | |

| 001 1100 | 034 | 28 | 1C | S4 | FS | ␜ | ^\ | File Separator | ||

| 001 1101 | 035 | 29 | 1D | S5 | GS | ␝ | ^] | Group Separator | ||

| 001 1110 | 036 | 30 | 1E | S6 | RS | ␞ | ^^[k] | Record Separator | ||

| 001 1111 | 037 | 31 | 1F | S7 | US | ␟ | ^_ | Unit Separator | ||

Source: https://en.wikipedia.org/wiki/ASCII

He then ran the following to grab all the rows that ended or started with tabs

SELECT * FROM StagingData s WHERE LEFT(SomeColumn,1) = char(9) OR RIGHT(SomeColumn,1) = char(9)

So let's take another look at this to see how we can make this a little better

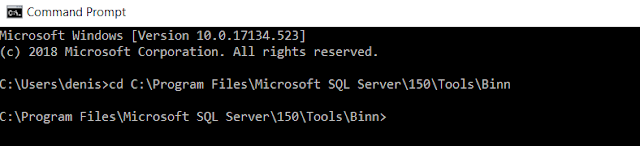

Let's create a table that will hold these bad characters that we don't want, in my case ACII values 1 untill 32

Here is what we will do to create and populate the table

CREATE TABLE BadCharacters( BadChar char(1) NOT NULL, ASCIINumber int NOT NULL, CONSTRAINT pk_BadCharacters PRIMARY KEY CLUSTERED( BadChar ) ) GO INSERT BadCharacters SELECT char(number),number FROM master..SPT_VALUES WHERE type = 'P' AND number BETWEEN 1 AND 32 OR number = 160

A quick look at the data looks like thisSELECT * FROM BadCharacters

Now let's create our staging table and insert some data so that we can do some tests

CREATE TABLE StagingData (SomeColumn varchar(255) ) INSERT StagingData SELECT CONVERT(VARCHAR(10),s1.number) + '.' + CONVERT(VARCHAR(10),s2.number) FROM master..SPT_VALUES s1 CROSS JOIN master..SPT_VALUES s2 WHERE s1.type = 'P' AND s2.type = 'P'

That inserted 4194304 rows on my machine

Time to insert some of that bad data

Here is what some of the data inserted will look like

2.1

2.2

2.3

2.4

2.5

2.6

2.7

2.8

And this is the query to generated and insert those bad rows, on my machine it generated 1089 such rows

;WITH cte as(SELECT CONVERT(VARCHAR(10),number) as num FROM master..SPT_VALUES WHERE type = 'P' AND number BETWEEN 1 AND 1000) --INSERT StagingData SELECT b.BadChar + c1.num + '.' + c2.num + b2.BadChar FROM cte c1 CROSS JOIN cte c2 JOIN BadCharacters b on c1.num = b.ASCIINumber JOIN BadCharacters b2 on c2.num = b2.ASCIINumberThe query create a value by using a bad value, a number a dot a number and a bad value, you can see those values above

Now it's time to find these bad rows, but before we do that, let's add an index

CREATE INDEX ix_StagingData on StagingData(SomeColumn)

OK, we are ready...

Of course I here you saying, why don't we just do this

SELECT * FROM StagingData WHERE TRY_CONVERT(numeric(20,10),SomeColumn) IS NULL

Before we start, let's set statistics io on so that we can look at some performance

SET STATISTICS IO ON GO

Here are the queries to find the bad characters at the start

SELECT * FROM StagingData s JOIN BadCharacters b on b.BadChar = LEFT(s.SomeColumn,1) SELECT * FROM StagingData s JOIN BadCharacters b on s.SomeColumn like b.BadChar +'%'

Here is what the reads look like

(1089 row(s) affected)

Table 'BadCharacters'. Scan count 1, logical reads 2, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table 'Worktable'. Scan count 0, logical reads 0, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table 'StagingData'. Scan count 9, logical reads 10851, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

(1089 row(s) affected)

Table 'BadCharacters'. Scan count 1, logical reads 2, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table 'StagingData'. Scan count 33, logical reads 135, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table 'Worktable'. Scan count 0, logical reads 0, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

As you can see from the stats, the top query is non-SARGable and generates a lot more reads, the bottom query can use the index. Always make sure to write your queries in a way so that SQL Server can you an index

What about the last character, how can we find those

SELECT * FROM StagingData s JOIN BadCharacters b on b.BadChar = RIGHT(s.SomeColumn,1) SELECT * FROM StagingData s JOIN BadCharacters b on s.SomeColumn like +'%' + b.BadChar

Here are the stats again

(1089 row(s) affected)

Table 'BadCharacters'. Scan count 1, logical reads 2, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table 'Worktable'. Scan count 0, logical reads 0, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table 'StagingData'. Scan count 9, logical reads 10851, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

(1089 row(s) affected)

Table 'BadCharacters'. Scan count 1, logical reads 2, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table 'StagingData'. Scan count 33, logical reads 445863, physical reads 0, read-ahead reads 13, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table 'Worktable'. Scan count 0, logical reads 0, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

So both of these queries suck the life out of your SQL Server instance, so what can be done?

One thing we can do is add a computed column to the table that will hold just the last character of the column, then we can index the computed column

Here are the commands to do that

ALTER TABLE StagingData ADD RightChar as RIGHT(SomeColumn,1) GO CREATE INDEX ix_RightChar on StagingData(RightChar) GO

And now we can just run the same queries again

SELECT * FROM StagingData s JOIN BadCharacters b on b.BadChar = RIGHT(s.SomeColumn,1) SELECT * FROM StagingData s JOIN BadCharacters b on s.SomeColumn like +'%' + b.BadChar

Here are the stats

(1089 row(s) affected)

Table 'StagingData'. Scan count 33, logical reads 1223, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table 'BadCharacters'. Scan count 1, logical reads 2, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

(1089 row(s) affected)

Table 'StagingData'. Scan count 33, logical reads 1223, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table 'BadCharacters'. Scan count 1, logical reads 2, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

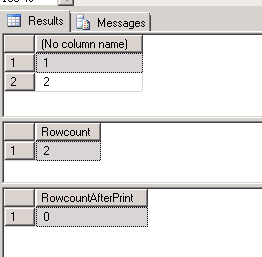

Did you expect to get the same exact reads for both queries?

So what is going on? Well lets take a look

In both cases, the optimizer was smart enough to use the index on the computed column

Hopefully this will make someone's life easier and you can expand the table to add other character you consider bad. You can also add constraint to reject values or you can add triggers and then move those bad rows to a bad rows table

Finally if you need to worry about unicode you might want to change the table to be nvarchar

Enjoy.. importing that data..... we all know..it's only getting bigger and bigger