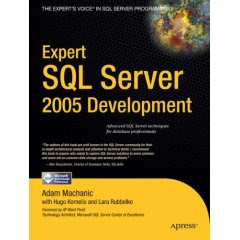

If you are a SQL developer then you know who Itzik Ben-Gan is. If for some strange reason you don’t know then listen up. Itzik is a SQL Server MVP, writes a monthly column for SQL Server Magazine, and is a principal mentor and founder of Solid Quality Learning. You can also find him in the SQL Server programming public newsgroups. If you browse the MSDN site you will find several articles written by Itzik.

I purchased Inside Microsoft SQL Server 2005: T-SQL Querying and must say it is my favorite SQL Server 2005 book so far. Chapters 3, 6 and 9 alone are worth the price of the book. I have posted a link to a sample chapter (6) at the end of this post if you are interested in learning more about the style of writing and seeing how awesome this book really is.

Let’s get started with the interview.

Q. What is the target audience for this book?

A. T-SQL developers with intermediate to advanced background.

By the way, the book is actually split into two volumes: Inside Microsoft SQL Server 2005: T-SQL Querying and Inside Microsoft SQL Server 2005: T-SQL Programming. Originally I intended to write one book, but I ended up with over 1200 pages, so I had to split it in two. But you should consider them as volumes 1 and 2 of one book. T-SQL Querying should be read before T-SQL Programming.

Also, I’d like to mention that several people contributed to the books, and I had great pleasure working with them. Lubor Kollar wrote Chapter 2 - Physical Query Processing of the T-SQL Querying volume and also provided great help and advice. Dejan Sarka wrote the CLR and XML content and explained what they have to do with the relational model. Roger Wolter wrote Chapter 11 - Service Broker of the T-SQL Programming volume. Steve Kass was the technical editor of the books, but contributed way more than what you would normally expect from technical editors. You can read about the contributors here:

http://www.insidetsql.com/

Q. What new technologies in SQL Server 2005 do you think are the most beneficial for developers?

A. I don’t think that it would be right of me to name two technologies in general; rather, it depends on need. For example, for applications that involve XML manipulation, developers will find XML enhancements beneficial (and there are major ones in the product). For applications that need to implement computationally intensive calculations, complex calculations, iterative logic, string manipulations, parsing, and so on at the database, developers will find CLR integration beneficial. For applications that need queuing support, developers will find the new queuing infrastructure and the Service Broker platform beneficial. And then there’s row versioning embedded in the engine, supporting the new snapshot isolation levels and other aspects of SQL Server; programmers will find this technology beneficial for certain types of systems that suffer from concurrency problems.

As for things that are closer to my heart; in regards to T-SQL, the two new features that I find most beneficial for developers are the OVER clause (e.g., with ranking calculations) and Common Table Expressions (CTE).

The OVER clause is really profound; I feel that it helps bridge the gap (or maybe I should say abyss) between cursors and sets. The OVER clause wasn’t implemented fully in SQL Server 2005, but even the existing implementation (especially with ranking calculations) allows simplifying and optimizing many pre-2005 solutions.
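As a minimal sketch of the ranking calculations mentioned above: the table variable, its columns, and the sample data below are made up for illustration and are not taken from the book. The syntax is written to run on SQL Server 2005.

```sql
-- Hypothetical sample data; names and values are illustrative only
DECLARE @Sales TABLE (empid INT NOT NULL PRIMARY KEY, qty INT NOT NULL);

INSERT INTO @Sales (empid, qty)
  SELECT 1, 300 UNION ALL
  SELECT 2, 100 UNION ALL
  SELECT 3, 300 UNION ALL
  SELECT 4, 200;

-- Three ranking functions over the same ordering.
-- ROW_NUMBER gets empid as a tiebreaker so its result is deterministic.
SELECT empid, qty,
  ROW_NUMBER() OVER(ORDER BY qty DESC, empid) AS rownum,
  RANK()       OVER(ORDER BY qty DESC)        AS rnk,
  DENSE_RANK() OVER(ORDER BY qty DESC)        AS drnk
FROM @Sales
ORDER BY qty DESC, empid;

-- empid  qty  rownum  rnk  drnk
-- 1      300  1       1    1
-- 3      300  2       1    1
-- 4      200  3       3    2
-- 2      100  4       4    3
```

A typical pre-2005 way to get the rnk column was a correlated subquery that counts the rows with a greater qty and adds 1, which is the kind of solution the OVER clause simplifies.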

As for CTEs, they have both nonrecursive and recursive forms. The nonrecursive form has several important advantages over derived tables, allowing less code and better code maintenance. The recursive form makes it possible, for the first time in SQL Server’s history, to write recursive queries, and very elegant ones. These are especially useful in manipulation of graphs (e.g., employee organizational chart, bill of materials, etc.).
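To illustrate the recursive form, here is a small sketch that walks an employee organizational chart from the top down. The @Employees table variable, its columns, and the names in it are hypothetical.

```sql
-- Hypothetical org chart; mgrid points to the employee's manager
DECLARE @Employees TABLE
(
  empid   INT         NOT NULL PRIMARY KEY,
  mgrid   INT         NULL,
  empname VARCHAR(25) NOT NULL
);

INSERT INTO @Employees (empid, mgrid, empname)
  SELECT 1, NULL, 'Ann'   UNION ALL
  SELECT 2, 1,    'Bob'   UNION ALL
  SELECT 3, 1,    'Carol' UNION ALL
  SELECT 4, 2,    'Dan'   UNION ALL
  SELECT 5, 4,    'Eve';

WITH EmpsCTE AS
(
  -- Anchor member: the root of the subtree
  SELECT empid, mgrid, empname, 0 AS lvl
  FROM @Employees
  WHERE empid = 1

  UNION ALL

  -- Recursive member: direct subordinates of the previous level
  SELECT E.empid, E.mgrid, E.empname, M.lvl + 1
  FROM EmpsCTE AS M
    JOIN @Employees AS E
      ON E.mgrid = M.empid
)
SELECT empid, mgrid, empname, lvl
FROM EmpsCTE
ORDER BY lvl, empid;
```

The recursive member keeps running against the previous level's result until it returns an empty set, so the query returns all five employees with lvl values 0 through 3.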

Q. What will a person who reads this book gain in terms of understanding how the query engine works?

A. The approach I took in the books was first to understand logical query processing, then physical query processing (the engine), and then tackle practical problems where you need to combine your knowledge in both. It’s a problem based approach; I didn’t attempt to explain the engine for the sake of understanding how the engine works; rather to look at common practical problems developers face, write several solutions to each problem, analyze how the engine processes the solutions, optimize their performance, and simplify them. Ideal solutions are those that are both simple and perform well. A complex solution may have the side-effect of making the author proud of the ability to write such a solution, but is more prone to bugs and obviously involves maintenance overhead. The real beauty is in simple solutions that also perform well.

Regarding querying logic, the books put a lot of emphasis on correct understanding of SQL and thinking in terms of sets; this is a very tough phase that developers have to go through since for most of us mere mortals it’s not a natural way of thinking.

Q. What are the most important things a person can do to master Transact-SQL?

A. The quickest way is to issue the following code:

USE master ;-)

On a more serious note, I believe that there’s no quick way—there’s a way; it’s an ongoing thing. I can give recommendations based on what I try to follow. But some aspects of the way are personal; different things may work differently for different people. Anyway, my two cents worth…

Most importantly, you need to master the basics; or maybe I should use the term fundamentals. Ego and vanity can be big obstacles here. People try to jump directly to what they consider “advanced” and don’t get the fundamentals right. Advanced techniques are a matter of combining fundamental techniques. So be diligent and invest a lot of effort in understanding the fundamentals well. In terms of querying logic this means logical query processing, sets, NULLs, three-valued-logic, all-at-once operations, and so on. In terms of physical processing, this means getting to know internal structures, access methods, analyzing execution plans, and so on.
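Two of the fundamentals listed above, three-valued logic and all-at-once operations, can be seen in a few lines. This is only a sketch; the table variable and its data are made up for illustration.

```sql
-- Hypothetical sample data
DECLARE @T TABLE (keycol INT NOT NULL PRIMARY KEY, col1 INT NULL, col2 INT NULL);

INSERT INTO @T (keycol, col1, col2)
  SELECT 1, 10,   20 UNION ALL
  SELECT 2, NULL, 30;

-- Three-valued logic: for the row where col1 is NULL, both col1 = 10 and
-- col1 <> 10 evaluate to UNKNOWN, and a WHERE filter discards UNKNOWN.
SELECT keycol FROM @T WHERE col1 = 10;     -- returns keycol 1
SELECT keycol FROM @T WHERE col1 <> 10;    -- returns no rows
SELECT keycol FROM @T WHERE col1 IS NULL;  -- returns keycol 2

-- All-at-once: every expression in the SET clause sees the values from
-- before the UPDATE, so this swaps the columns without a temporary variable.
UPDATE @T SET col1 = col2, col2 = col1;

SELECT keycol, col1, col2 FROM @T ORDER BY keycol;
-- keycol  col1  col2
-- 1       20    10
-- 2       30    NULL
```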

When facing new problems, try to solve them yourself before looking at others’ solutions. When you try something yourself you understand it best. This will also help you become more creative and develop your own techniques. Also, try different approaches, not just one, and compare the solutions.

Never consider yourself as being finished. Strive for perfection but never consider your solution perfect. Constantly work on problem solving; look for new problems and keep going back to old problems and try to improve the solutions. This way you polish and perfect your techniques and enhance your vocabulary.

I also feel that you get a much better understanding of a subject when you need to explain it to others.

I truly believe in all of the above, but ideally, you don’t need me to tell you these things. In fact I feel awkward and uncomfortable giving such recommendations; so if at this point you think that I’m a fart I won’t hold it against you. I feel more comfortable writing queries and talking about them. ;-)

As I said earlier, this simply feels natural to me. Though some things are common in the way to master any profession, some things are and should be personal; namely, you have to find your own way and not necessarily follow what someone else recommends to the letter. :-)

Q. Itzik, the first time I came across your name was in the Microsoft public SQL Server programming group. Can you explain to us why you participate in newsgroups and forums?

A. In the past I used to be much more active in the newsgroups; today less, but whenever I participate I feel revived—especially when visiting the public programming newsgroup. If I had to name one thing that had the greatest impact on my knowledge in T-SQL it would doubtless be my participation in the newsgroups. It’s a very fertile soil to grow. So many practical problems are posted there daily, and by trying to solve other people’s problems, you end up learning more and more. Also, many practical problems are repeated there frequently, and as time goes by, you keep perfecting your techniques, and also sometimes get to see very unique and interesting solutions posted by other people. People pay so much money to study in schools, colleges and universities; here’s a great place to learn for free, and while you’re at it, you end up helping other people. :-) It’s the perfect Dojo for T-SQL practitioners.

Q. How did the massive changes between SQL Server 2000 and SQL Server 2005 affect the research for your book?

A. I think I went through a different experience than some other authors. I’m so immersed in the T-SQL language that I feel that it’s my mother tongue. Seriously, in many cases I feel that I can express myself better with T-SQL than with English or even Hebrew which is supposed to be my native tongue. I was deeply involved in writing T-SQL code using new language elements from the very early builds of Yukon. Also, our company, Solid Quality Learning, works closely with the SQL Server development team and customer advisory team (CAT). We worked on whitepapers for beta builds and got access to specs in very early stages of the product. I also developed and delivered courses on Advanced T-SQL before writing the books. So writing the books did not involve research, rather the material was essentially part of me, and all I had to do was to convert my thoughts to words.

I have similar experience with writing in general. I have a monthly T-SQL column in SQL Server Magazine, and often people ask me where do I get ideas, and what’s the process involved. I work on so many ideas constantly not for the sake of writing, rather, it’s a natural part of me—the way I was talking about earlier. So when it’s time to write a column, it’s basically pulling something from one of the drawers in my mind and putting it into words.

Q. Name three things that you wish would be in Katmai (the next version of SQL Server)?

A. These are easy to name, but I doubt that my wishes will come true in Katmai:

1. Support the ORDER BY sub-clause of the OVER clause for aggregate functions; BTW, this has nothing to do with ordered aggregates, rather it’s a logical way to define to a window function which window to work with. I’ll provide a link to a paper which I wrote recently for people looking for more details.

2. Support the ROWS and RANGE windowing sub-clauses of the OVER clause for aggregate functions.

3. Add support for row value constructors/vector expressions.

In general I wish to see more focus on T-SQL enhancements, and specifically, a more complete implementation of the profound OVER clause. It bears great benefits within it that I believe many people are not aware of yet. You can find more details in the following paper:

http://www.insidetsql.com/OVER_Clause_and_Ordered_Calculations.doc
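To make the first two wishes concrete, here is a sketch of a running total written both ways. The first query uses a correlated subquery and runs on SQL Server 2005. The second uses the standard ORDER BY and ROWS sub-clauses for an aggregate, which is the wished-for form, so it fails on SQL Server 2005; support was added in later versions (SQL Server 2012 onward). The #Txns table and its data are hypothetical.

```sql
-- Hypothetical sample data; names are illustrative only
CREATE TABLE #Txns
(
  actid  INT NOT NULL,
  tranid INT NOT NULL,
  val    INT NOT NULL,
  PRIMARY KEY(actid, tranid)
);

INSERT INTO #Txns (actid, tranid, val)
  SELECT 1, 1, 100 UNION ALL
  SELECT 1, 2, -30 UNION ALL
  SELECT 1, 3,  50 UNION ALL
  SELECT 2, 1,  20 UNION ALL
  SELECT 2, 2,  10;
GO

-- SQL Server 2005: running total per account via a correlated subquery
SELECT T1.actid, T1.tranid, T1.val,
  (SELECT SUM(T2.val)
   FROM #Txns AS T2
   WHERE T2.actid = T1.actid
     AND T2.tranid <= T1.tranid) AS balance
FROM #Txns AS T1
ORDER BY T1.actid, T1.tranid;
GO

-- Wished-for form: the window is defined directly in the OVER clause
-- (not supported in SQL Server 2005)
SELECT actid, tranid, val,
  SUM(val) OVER(PARTITION BY actid
                ORDER BY tranid
                ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW) AS balance
FROM #Txns
ORDER BY actid, tranid;
GO

DROP TABLE #Txns;
```

Where both queries run, they return the same balances: 100, 70, 120 for account 1 and 20, 30 for account 2.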

Q. Do you think that every developer should have a numbers table in their database?

A. That’s a good question that makes me smile. :-) Yes! If not a table, a table function. I’ve used an auxiliary table of numbers in so many solutions; I find it a great helper tool to solve many querying problems. Just as an example, here’s a query using an auxiliary table of numbers (called Nums with a column called n) that splits strings containing comma separated lists of values (stored in a table called Arrays, with columns arrid and string):

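```sql
SELECT
  arrid,
  -- Element position: number of commas in the first n characters, plus 1
  n - LEN(REPLACE(LEFT(string, n), ',', '')) + 1 AS pos,
  -- Element value: from n up to (but excluding) the next comma
  SUBSTRING(string, n,
    CHARINDEX(',', string + ',', n) - n) AS val
FROM Arrays JOIN Nums
  -- Keep only the values of n at which an element starts
  ON n <= LEN(string) AND SUBSTRING(',' + string, n, 1) = ','
ORDER BY arrid, pos;
```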

And if you need to operate on a single array (e.g., one that you get as an input to a stored procedure), you can encapsulate the logic in an inline table function:

```sql
CREATE FUNCTION dbo.fn_split(@arr AS VARCHAR(MAX)) RETURNS TABLE
AS
RETURN
  SELECT
    n - LEN(REPLACE(LEFT(@arr, n), ',', '')) + 1 AS pos,
    SUBSTRING(@arr, n,
      CHARINDEX(',', @arr + ',', n) - n) AS element
  FROM dbo.Nums
  WHERE n <= LEN(@arr) AND SUBSTRING(',' + @arr, n, 1) = ',';
GO

SELECT * FROM dbo.fn_split('10248,10249,10250');
```

Output:

```
pos  element
---- --------
1    10248
2    10249
3    10250
```

This implementation has major advantages (both in terms of security and performance) over the commonly practiced solution utilizing dynamic SQL.
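As a rough sketch of how the split function replaces the dynamic SQL approach, the query below joins the split elements to a table. It assumes dbo.fn_split and dbo.Nums already exist as described above; the @Orders table variable and its data are hypothetical.

```sql
-- Hypothetical orders data; dbo.fn_split and dbo.Nums are assumed to exist
DECLARE @Orders TABLE (orderid INT NOT NULL PRIMARY KEY, custid CHAR(5) NOT NULL);

INSERT INTO @Orders (orderid, custid)
  SELECT 10248, 'AAAAA' UNION ALL
  SELECT 10249, 'BBBBB' UNION ALL
  SELECT 10250, 'CCCCC';

DECLARE @arr AS VARCHAR(MAX);
SET @arr = '10248,10250';

-- Static query: the list is treated purely as data, never as code
SELECT O.orderid, O.custid
FROM dbo.fn_split(@arr) AS A
  JOIN @Orders AS O
    ON O.orderid = CAST(A.element AS INT)
ORDER BY A.pos;

-- For contrast only (left commented out): the dynamic SQL pattern
-- concatenates the input into the statement text, so the input can
-- change the statement itself. dbo.Orders here is a hypothetical table.
-- EXEC('SELECT orderid, custid FROM dbo.Orders WHERE orderid IN(' + @arr + ');');
```

Because the static query's text never changes, its plan can be reused across different lists, and the input has no way to inject code.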

Erland Sommarskog, a fellow MVP, wrote a great paper on the subject. You can find details here:

http://www.sommarskog.se/arrays-in-sql.html

By the way, you can vote for adding the table of numbers as part of the product here:

https://connect.microsoft.com/SQLServer/feedback/ViewFeedback.aspx?FeedbackID=258733

As a tip, here’s a very fast inline table function that will return a table of numbers for you:

```sql
CREATE FUNCTION dbo.fn_nums(@n AS BIGINT) RETURNS TABLE
AS
RETURN
  WITH
  L0   AS(SELECT 1 AS c UNION ALL SELECT 1),        -- 2 rows
  L1   AS(SELECT 1 AS c FROM L0 AS A, L0 AS B),     -- 4 rows
  L2   AS(SELECT 1 AS c FROM L1 AS A, L1 AS B),     -- 16 rows
  L3   AS(SELECT 1 AS c FROM L2 AS A, L2 AS B),     -- 256 rows
  L4   AS(SELECT 1 AS c FROM L3 AS A, L3 AS B),     -- 65,536 rows
  L5   AS(SELECT 1 AS c FROM L4 AS A, L4 AS B),     -- 4,294,967,296 rows
  Nums AS(SELECT ROW_NUMBER() OVER(ORDER BY c) AS n FROM L5)
  SELECT n FROM Nums
  WHERE n <= @n;
GO

-- Test function
SELECT * FROM dbo.fn_nums(10) AS F;
GO
```

Output:

```
n
---
1
2
3
4
5
6
7
8
9
10
```

It’s even faster than querying a real table of numbers. ;-)
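As one more illustration of what a numbers function is good for, here is a sketch that generates a contiguous range of dates. It assumes dbo.fn_nums exists as defined above; the variable names and dates are arbitrary.

```sql
DECLARE @start AS DATETIME, @end AS DATETIME;
SET @start = '20070101';
SET @end   = '20070110';

-- One row per day in the range. ROW_NUMBER returns BIGINT, and DATEADD
-- expects an INT for its number argument, hence the CAST.
SELECT DATEADD(day, CAST(n AS INT) - 1, @start) AS dt
FROM dbo.fn_nums(DATEDIFF(day, @start, @end) + 1) AS F
ORDER BY dt;
```

This returns ten rows, January 1 through January 10, 2007. Joining such a result to a fact table is a common way to fill in days that have no activity.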

Q. Why is it important to think in sets instead of procedural code?

A. SQL is based on the relational model, which in turn is based on set theory and predicate logic. The relational model is a proven model for handling data, and has been for several decades. It’s important to adopt set-based thinking in order to write simple and efficient solutions; otherwise, you defeat the whole premise on which the model is based. SQL queries (set-based) have several advantages over procedural/iterative code (e.g., using cursors) as far as data manipulation is concerned. Set-based solutions focus on the “what” part of the solution; namely their logic. Iterative solutions spend a lot of code on the “how” part, clouding the logic behind all the code; lengthy code naturally has maintenance overhead. Set-based solutions allow the optimizer to choose an execution plan among several possible plans based on cost estimations, while iterative solutions typically force a very rigid plan. In short, set-based solutions allow you to focus on logic, and speak with the database in its native language. Also, cursors have a lot of overhead associated with the record-by-record manipulation.
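The contrast between the “how” and the “what” can be sketched with a deliberately trivial task, summing one customer's order quantities. The #Orders table and its data are hypothetical; both versions return 45.

```sql
-- Hypothetical sample data
CREATE TABLE #Orders
(
  orderid INT NOT NULL PRIMARY KEY,
  custid  INT NOT NULL,
  qty     INT NOT NULL
);

INSERT INTO #Orders (orderid, custid, qty)
  SELECT 1, 1, 10 UNION ALL
  SELECT 2, 1, 20 UNION ALL
  SELECT 3, 2, 30 UNION ALL
  SELECT 4, 1, 15;

-- Iterative solution: most of the code describes how to walk the rows
DECLARE @qty AS INT, @total AS INT;
SET @total = 0;

DECLARE C CURSOR FAST_FORWARD FOR
  SELECT qty FROM #Orders WHERE custid = 1;

OPEN C;
FETCH NEXT FROM C INTO @qty;
WHILE @@FETCH_STATUS = 0
BEGIN
  SET @total = @total + @qty;
  FETCH NEXT FROM C INTO @qty;
END
CLOSE C;
DEALLOCATE C;

SELECT @total AS total_qty;

-- Set-based solution: states what is wanted and leaves the how to the optimizer
SELECT SUM(qty) AS total_qty
FROM #Orders
WHERE custid = 1;

DROP TABLE #Orders;
```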

I’m not saying that ALL problems are best solved with set-based solutions, but the majority of data manipulation related problems are. Tasks that involve less data manipulation, and more computations, string manipulation, parsing, inherently iterative logic, these can benefit from procedural solutions. But T-SQL (and SQL in general) was not designed to handle such tasks efficiently. That’s an area where .NET is much stronger, richer and more efficient. These are the areas where I see the benefit in the CLR integration in SQL Server 2005.

Q. Which chapter was the hardest to write and can you explain why?

A. Chapter 3 – Query Tuning in the T-SQL Querying volume. It was the hardest for several reasons.

In this chapter I cover internal structures and access methods. It was very important for me to depict internal structures and diagrams of access methods graphically as accurately and as detailed as possible. I created many figures and worked on each for many hours at the pixel level. Several figures took a day of work each. I actually took snapshots of real internal structures like pages of B-Trees with DBCC PAGE and DBCC IND commands, and incorporated real bits of data in the diagrams to make them as reliable as possible.

Also, the chapter appears early in the first volume. I wanted it early in the books to provide important foundations and background that is required for the rest of the books. But I had to include queries used for performance analysis that use advanced techniques that I explain later in the book.

There were also other obstacles.

This was the chapter I wrote last, and it took me several very draining weeks to complete. It ended up with over 100 pages. There’s a lot of query tuning coverage throughout the books, but it’s not the tuning part that was hard, rather the aforementioned reasons.

Q. Why do you write technical books?

A. I’m a teacher in heart and soul. I think that writing is an integral part of teaching. It’s a flowing river; you acquire knowledge and you pass on your knowledge to others. It’s very hard and requires a lot of discipline, but ultimately it’s a process from which you also gain a lot.

Also, there’s a more selfish reason. As I said, I’m a teacher and I want students in my classes. Books give you exposure and I hoped the books would get more students in my courses.

Q. Who are your favorite authors?

A. JRR Tolkien, William Goldman, Douglas Adams, Edgar Allan Poe, Niccolo Machiavelli, Yamamoto Tsunetomo, Miyamoto Musashi, Sun Tzu, J.K. Rowling

By the way, some of my favorite authors did not write any books, yet. :)

Here are a few examples:

David Campbell, who is the General Manager of Strategy, Infrastructure and Architecture of Microsoft SQL Server, used to participate in some private SQL newsgroups back in the days when he owned SQL Server’s Storage Engine. His depth of knowledge, teaching and explanatory skills, passion, humility, great English, all were evident in his posts. I asked him to write the foreword to the T-SQL Querying book; you have to read it to understand what I’m talking about. It’s pure joy to read his writings, and I sorely miss his participation in the newsgroups. I wish he had written books back in the days when he was very deeply involved in the technicalities and small details of SQL Server’s engine.

Roy Harvey, a fellow SQL Server MVP. He’s active in the SQL Server community especially in the SQL Server Programming newsgroup. When you read his posts you feel the experience, wisdom and humility flowing from them. I would have very much loved to see work published by him, but you know, some very wise and knowledgeable people shy away from publicity and exposure—unfortunately for us.

Steve Kass is another good example. Steve was the technical editor of my books and there are no words that I can use to describe his capabilities. He has a very unique combination of knowledge, logic, mathematics, SQL, genius, perfect English, and a way of explaining things in a simple manner. Oh, how much I’d love to see his work published in books some day!

I also like reading posts by David Portas; another fellow MVP. I’d love to see a book on modeling published by him some day.

Q. What SQL Server books are on your bookshelf?

A. With your permission, I’d like to broaden my reply to books covering topics that I believe are important for SQL practitioners. On my bookshelf you will find The Art of Computer Programming by Donald E. Knuth, books about logic puzzles, The Thirteen Books of The Elements by Euclid, Elements of Set Theory, Graph Theory, Regular Expressions Pocket Reference by Tony Stubblebine, Mastering Regular Expressions by Jeffrey E. F. Friedl, several SQL books by Joe Celko, SQL-99 Complete, Really by Gulutzan and Pelzer, Inside Microsoft SQL Server (various versions; early by Ron Soukup, later by Kalen Delaney), The Art of SQL by Stephane Faroult with Peter Robson, and others…

You can find a more extensive list here:

http://www.sql.co.il/books/insidetsql2005/resources.htm#Books

I have to say though that I probably spend more time writing stuff than reading…

Q. I know you like math and logic puzzles, do you think it is beneficial for a SQL developer to be somewhat good in math?

A. Yes, but I would say it is beneficial to be good in logic and not necessarily generally in math. That’s why I included a whole appendix with pure logic puzzles in the T-SQL Querying volume. Some people find it odd, while people that truly follow the SQL way find it natural (at least that’s what I believe). Almost every SQL querying problem is at its heart a logic puzzle. It’s very common to see that people who are deeply immersed in SQL are also very fond of logic puzzles. It’s not by chance. My belief is that if you practice logic puzzles you can improve your SQL. Also, logic puzzles are fun and can be practiced by the whole family.

And there’s another reason to dwell on logic—one coined by Sir Arthur Conan Doyle:

"Crime is common. Logic is rare. Therefore it is upon the logic rather than upon the crime that you should dwell."