https://www.postgresql.org/about/news/2040/

One thing that caught my eye was this statement in the release notes

PostgreSQL 13 brings more convenience to writing queries with features like FETCH FIRST WITH TIES, which returns any additional rows that match the last row.

This is I guess exactly like TOP WITH TIES in SQL Server. I believe this has been around in SQL Server since at least version 7. How many times have I used it in code that was deployed in the last 20 years? I believe I have used WITH TIES only once. It does make for great interview questions and SQL puzzles :-)

So let's take a quick look at how TOP WITH TIES works in SQL Server. The first thing we will do is look at what Books On Line says about TOP

WITH TIES Returns two or more rows that tie for last place in the limited results set. You must use this argument with the ORDER BY clause. WITH TIES might cause more rows to be returned than the value specified in expression. For example, if expression is set to 5 but two additional rows match the values of the ORDER BY columns in row 5, the result set will contain seven rows.You can specify the TOP clause with the WITH TIES argument only in SELECT statements, and only if you've also specified the ORDER BY clause. The returned order of tying records is arbitrary. ORDER BY doesn't affect this rule.

Time to get started and write some code to see this in action

First create this table of students and insert some data

CREATE TABLE #TopExample(GradeAverage int, Student varchar(100)) INSERT #TopExample VALUES(99.00,'Plato'), (98,'Socrates'), (95,'Diogenes the Cynic'), (94,'Antisthenes'), (94,'Demetrius'), (50,'Denis')

As you can see, I am not a very good student :-(

If you do a regular TOP 4 query like this

SELECT TOP 4 GradeAverage, Student FROM #TopExample ORDER BY GradeAverage DESC

You will get back these results

GradeAverage Student 99 Plato 98 Socrates 95 Diogenes the Cynic 94 Demetrius

As you can see we are missing another student with a grade of 94, this is Antisthenes

This is easily fixed by adding WITH TIES to the query

SELECT TOP 4 WITH TIES GradeAverage, Student FROM #TopExample ORDER BY GradeAverage DESC

Now, you will get back these results, as you can see, you now have 5 rows and both rows with a grade average of 94 are included

GradeAverage Student 99 Plato 98 Socrates 95 Diogenes the Cynic 94 Demetrius 94 Antisthenes

Another way to do the same as WITH TIES is by using DENSE_RANK. That query looks like this

;WITH c AS (SELECT DENSE_RANK() OVER (ORDER BY GradeAverage DESC) AS dens, GradeAverage,Student FROM #TopExample) SELECT GradeAverage, Student FROM c WHERE dens <=4 ORDER BY GradeAverage DESC

You will get back these same results again, you now have 5 rows and both rows with a grade average of 94 are included as well

GradeAverage Student 99 Plato 98 Socrates 95 Diogenes the Cynic 94 Demetrius 94 Antisthenes

Using DENSE_RANK is bit more code, but if portability is a concern, it might be a better choice

There you go a post about a feature you will never use :-)

If you want to run all the queries in one shot here is all the code

CREATE TABLE #TopExample(GradeAverage int, Student varchar(100)) INSERT #TopExample VALUES(99.00,'Plato'), (98.00,'Socrates'), (95.00,'Diogenes the Cynic'), (94.00,'Antisthenes'), (94.00,'Demetrius'), (50.00,'Denis') SELECT TOP 4 GradeAverage, Student FROM #TopExample ORDER BY GradeAverage DESC SELECT TOP 4 WITH TIES GradeAverage, Student FROM #TopExample ORDER BY GradeAverage DESC ;WITH c AS (SELECT DENSE_RANK() OVER (ORDER BY GradeAverage DESC) AS dens, GradeAverage,Student FROM #TopExample) SELECT GradeAverage, Student FROM c WHERE dens <=4 ORDER BY GradeAverage DESC DROP TABLE #TopExample

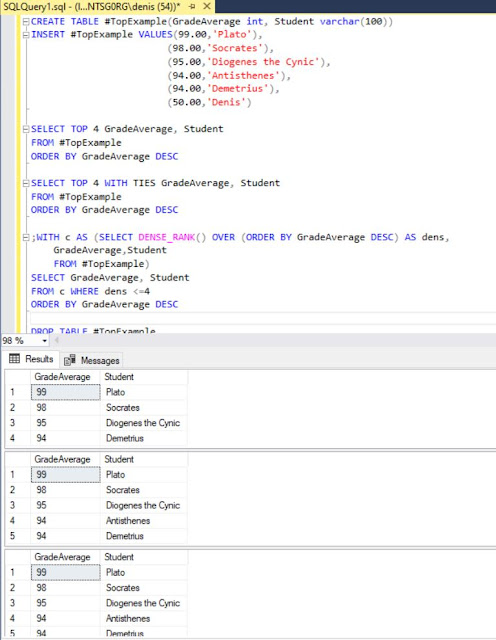

And here is what it all looks like in SSMS, code and output