This is just a quick and fun post.

I was troubleshooting a deadlock the other day and it got me thinking... I know the number 1205 by heart and know it is associated with a deadlock. What other numbers are there that you can associate with an event, object or limitation? For example, a lot of people will know 32767 as the database id of the ResourceDb; master is 1, msdb is 4, etc.

So below is a list of numbers I thought of

Leave me a comment with any numbers that you know by heart

BTW I didn't do the limits for int, smallint etc., since those are the same in most programming languages... so not unique to SQL Server

-1

You use -1 with DBCC TRACESTATUS to see which trace flags are enabled globally on your system

For example, on a brand new instance I turned on these 3 trace flags; when I then check the trace status, I get them back in the output

DBCC TRACEON (3605,1204,1222,-1)

DBCC TRACESTATUS(-1)

| TraceFlag | Status | Global | Session |
|---|---|---|---|
| 1204 | 1 | 1 | 0 |
| 1222 | 1 | 1 | 0 |
| 3605 | 1 | 1 | 0 |

1

You can only have 1 clustered index per table. This is also a favorite interview question, asking people to explain why there can only be 1 clustered index

3

The smallest nonzero fractional-second value in a datetime data type is 3 milliseconds

Fractional seconds are rounded to increments of .000, .003, or .007 seconds

This means the first value after midnight (.000) is .003 seconds

Take a look at this

DECLARE @d DATETIME = '2019-03-19 23:59:59.997'

SELECT @d AS orig,

dateadd(ms,1,@d) AS orig1ms,

dateadd(ms,2,@d) AS orig2ms,

dateadd(ms,3,@d) AS orig3ms,

dateadd(ms,4,@d) AS orig4ms,

dateadd(ms,5,@d) AS orig5ms

Output

2019-03-19 23:59:59.997

2019-03-19 23:59:59.997

2019-03-20 00:00:00.000

2019-03-20 00:00:00.000

2019-03-20 00:00:00.000

2019-03-20 00:00:00.003

This is also the reason you will see datetime values in queries with '23:59:59.997' as the time portion: it is the last value a datetime can hold before midnight. It will mostly be used with BETWEEN

For example

SELECT ...

FROM SomeTable

WHERE SomeDate BETWEEN '2019-03-19' AND '2019-03-19 23:59:59.997'

Which, for a datetime column, is of course the same as the query below

SELECT ...

FROM SomeTable

WHERE SomeDate >= '2019-03-19'

AND SomeDate < '2019-03-20'

But it's less typing to use between :-)
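To make the rounding rule easier to play with outside of SQL Server, here is a minimal Python sketch that models it. It assumes the documented behavior (datetime keeps time in 1/300-second ticks, so milliseconds snap to .000, .003 or .007); the function name `round_to_sql_datetime` is made up for this post, and this is a model of the rule, not SQL Server's actual implementation.

```python
from datetime import datetime, timedelta


def round_to_sql_datetime(value: datetime) -> datetime:
    """Model how a millisecond-precision value lands on the datetime grid.

    Assumption: the input carries whole milliseconds; anything finer is dropped.
    """
    ms = value.microsecond // 1000
    # Nearest 1/300-second tick, with exact halves rounding up (ms * 0.3).
    ticks = (ms * 3 + 5) // 10
    # Convert ticks back to whole milliseconds (ticks * 10 / 3, rounded).
    rounded_ms = (ticks * 20 + 3) // 6
    # rounded_ms can be 1000, which correctly rolls over into the next second.
    return value.replace(microsecond=0) + timedelta(milliseconds=rounded_ms)


if __name__ == "__main__":
    start = datetime(2019, 3, 19, 23, 59, 59, 997000)
    for extra_ms in range(6):
        candidate = start + timedelta(milliseconds=extra_ms)
        print(extra_ms, round_to_sql_datetime(candidate).isoformat(sep=" ", timespec="milliseconds"))
```

The loop mirrors the DATEADD query above: adding 2 ms to 23:59:59.997 already rolls over to midnight of the next day, which is why a BETWEEN that ends in '23:59:59.999' would silently include the next day's midnight rows.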

Another one with the number 3 is the /3GB flag you could set in the boot.ini file. In that case, if you had a 32-bit system with 4 GB of RAM, SQL Server could now use 3GB instead of only 2GB... oh the good old times :-)

10

STATS = 10

When you script out a BACKUP or RESTORE command, it will use STATS = 10 by default, so every 10% you will get a message like the ones below

10 percent processed.

20 percent processed.

30 percent processed.

40 percent processed.

50 percent processed.

For big databases, I like to use STATS = 1

15

If you have been using SQL Server for a while, you might see this in the error log

SQL Server has encountered 1 occurrence(s) of I/O requests taking longer than 15 seconds to complete on file [D:\SomeFilename.ldf] in database

The OS file handle is 0x0000000000000950. The offset of the latest long I/O is: 0x000000545eb200

There are several reasons why this might happen

1. SQL Server is issuing more I/O requests than the disk subsystem can handle.

2. There could be an issue with the I/O subsystem itself: a driver or firmware problem, a misconfiguration, or compression being turned on, so the disks perform very slowly and SQL Server is affected by it.

3. Some other process on the system is saturating the disks with I/O requests. Common culprits include antivirus scans and system backups.

50

Session ids of 50 or lower are system sessions... You would filter these out of sp_who2 to get all the user-generated sessions (not always true: I have seen mirroring spids between 51 and 70 on one of my servers)

These days you would use is_user_process instead

So instead of this query

SELECT *

FROM sys.dm_exec_sessions AS es WITH (NOLOCK)

WHERE es.session_id > 50

You would use this one

SELECT *

FROM sys.dm_exec_sessions AS es WITH (NOLOCK)

WHERE es.is_user_process = 1

99.999

The five nines... everyone knows this number. A 99.999% uptime guarantee means that the service or application will only be down for approximately five minutes and 15 seconds every year.
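If you want to check that arithmetic, or see what other numbers of nines allow, here is a small Python sketch. It assumes a 365-day year, and the helper name `allowed_downtime_seconds` is just something I made up for illustration.

```python
from fractions import Fraction

SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # assumption: a 365-day year


def allowed_downtime_seconds(availability_percent: str) -> Fraction:
    """Yearly downtime budget in seconds for an availability percentage.

    The percentage is passed as a string (for example "99.999") so that
    Fraction can parse it exactly, without floating-point noise.
    """
    unavailable = 1 - Fraction(availability_percent) / 100
    return unavailable * SECONDS_PER_YEAR


if __name__ == "__main__":
    for nines in ("99.9", "99.99", "99.999"):
        minutes, seconds = divmod(allowed_downtime_seconds(nines), 60)
        print(f"{nines}% -> {int(minutes)} min {float(seconds):.2f} s per year")
```

For 99.999 this works out to 315.36 seconds, which is the five minutes and 15 seconds quoted above.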

100

The default for MAXRECURSION in a recursive CTE. You can change it with OPTION (MAXRECURSION n), where 0 means no limit

128

Maximum identifier length in characters (name of table, column, etc.)

128 is plenty. I still remember some FoxPro databases where the length could not exceed 8, and you would end up with names like Addrln1

Here is a repro script that will attempt to create a table where the name is 150 characters in length

DECLARE @Ident VARCHAR(150) = REPLICATE('A', 150)

DECLARE @sql VARCHAR(500) = 'create table ' + @Ident +'(id int)'

EXEC( @sql )

And it blows up with the following error

Msg 103, Level 15, State 4, Line 1

The identifier that starts with 'AAAA.....AAA' is too long. Maximum length is 128.

300

A Page Life Expectancy of 300 seconds means SQL Server expects a page to stay in the buffer pool for only about 300 seconds after reading it. This number is quoted all over the place as the threshold below which you have issues. Is the number 300 still correct? Start here https://www.sqlskills.com/blogs/paul/page-life-expectancy-isnt-what-you-think/

900

The maximum key size for a clustered index is 900 bytes. But then again, if you have such a wide clustered index and several nonclustered indexes... good luck!

999

The number of nonclustered indexes you can have per table

I believe this number used to be 249 or 254 back in the day... but I guess it changed after that monstrosity SharePoint came into existence

1,000

Ah yes, who doesn't remember this number. It usually starts with someone saying that they don't see any job history for the job they created on the new server

Hmmm, you already know the answer don't you?

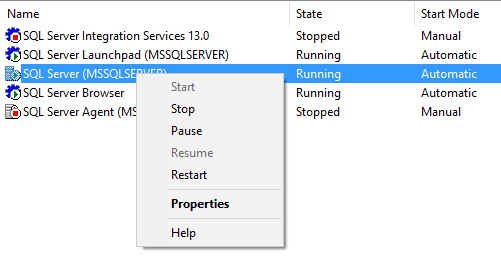

You go and open up SQL Agent-->Properties-->History

And what do you see?

Maximum job history log size: 1000

Maximum job history rows per job: 100

Ah yes..those nasty defaults

Or someone was evil and executed the proc sp_purge_jobhistory for your job :-)

1,205

Transaction (Process ID %d) was deadlocked on %.*ls resources with another process and has been chosen as the deadlock victim. Rerun the transaction.
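Since the message literally tells you to rerun the transaction, here is a rough Python sketch of what that could look like on the application side. Everything named here is hypothetical: `SqlError` is a stand-in for whatever exception your database driver raises (real drivers expose the error number in their own way), and `run_with_deadlock_retry` is just an illustrative helper, not part of any library.

```python
import time

DEADLOCK_VICTIM = 1205  # SQL Server error number for the deadlock victim


class SqlError(Exception):
    """Hypothetical stand-in for a driver exception carrying the server error number."""

    def __init__(self, number, message=""):
        super().__init__(message)
        self.number = number


def run_with_deadlock_retry(work, max_attempts=3, base_delay=0.5):
    """Call work() and rerun it if it fails as a deadlock victim.

    Any other error, or a deadlock on the final attempt, is re-raised.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return work()
        except SqlError as err:
            if err.number != DEADLOCK_VICTIM or attempt == max_attempts:
                raise
            # Back off a little longer after each failed attempt.
            time.sleep(base_delay * attempt)
```

The `work` callable should contain the whole transaction, because the victim's transaction has been rolled back by the time you see the error.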

1,222

Use this trace flag to return the resources and types of locks that are participating in a deadlock and also the current command affected

1,433

The default TCP port that a default instance of SQL Server listens on

1,700

You can have a key size of up to 1,700 bytes for a nonclustered index.

2,100

Parameters per stored procedure or user-defined function

Tried that one as well...

declare @d varchar(max) = 'create procedure prtest '

;with cte as (

select number from master..spt_values

where type = 'p'

union

select number + 2048 from master..spt_values

where type = 'p'

)

select top 2101 @d += '@i' + convert(varchar(10),number) + ' int ,'

from cte

select @d = left(@d, len(@d) -1) + 'as select 1 as Test'

exec(@d)

And here is the error

Msg 180, Level 15, State 1, Procedure prtest, Line 1

There are too many parameters in this CREATE PROCEDURE statement. The maximum number is 2100.

3,226

Oh, your error log is full of messages about successful backups?

Those are not really errors are they?

To stop logging all of your backup success entries to the error log, use traceflag 3226

3,605

As I showed in the section for number -1, you would use trace flag 3605 alongside trace flags 1204 and 1222 to send deadlock information to the error log

4,096

Columns per SELECT statement. Really who has such a query?

Hmm, I just had to try that out

declare @d varchar(max) = 'select top 1 '

;with cte as (

select number from master..spt_values

where type = 'p'

union

select number + 2048 from master..spt_values

where type = 'p'

union all

select 4096)

select @d += 'name as [' + convert(varchar(10),number) + '] ,'

from cte

select @d = left(@d, len(@d) -1) + 'from sys.objects'

exec(@d)

(1 row(s) affected)

Msg 1056, Level 15, State 1, Line 1

The number of elements in the select list exceeds the maximum allowed number of 4096 elements.

Also, 4096 bytes is the default network packet size

4,199

Trace flag 4199 enables query optimizer (QO) fixes released in SQL Server Cumulative Updates and Service Packs. See also the hint 'ENABLE_QUERY_OPTIMIZER_HOTFIXES', available from SQL Server 2016 SP1 onwards

8,060

Bytes per row

The maximum number of bytes a single row can hold on a page (not taking into account row overflow data types). The page itself is 8 KB (8,192 bytes), 96 of which are the page header

15,000

Partitions per partitioned table or index

I think this used to be either 999 or 1000...don't remember exactly

32,767

This is the database id of the ResourceDb