Try to guess without running the code which of the following 3 queries will return a value for maxvalue that is not null

Is it A, B or C?

--A

DECLARE @maxValue DATETIME

SET @maxValue = '1/1/1900'

SELECT @maxvalue = MAX(crdate)

FROM sysobjects WHERE ID = -99

SELECT 'A' ,@maxvalue maxValue

GO

--B

DECLARE @maxValue DATETIME

SET @maxValue = '1/1/1900'

SELECT TOP 1 @maxValue = crdate

FROM sysobjects WHERE ID = -99

ORDER BY crdate DESC

SELECT 'B' ,@maxvalue maxValue

GO

--C

DECLARE @maxValue DATETIME

SET @maxValue = '1/1/1900'

SET @maxvalue = (SELECT TOP 1 crdate

FROM sysobjects WHERE ID = -99

ORDER BY crdate DESC

SELECT 'C' ,@maxvalue maxValue

GO

Let me know if you were correct. I must admit I saw this code on Simon Sabin's blog

To understand why this happens read this Tony Rogerson article: T-SQL Value assignment SET vs SELECT

So what does this have to do with Halloween? Nothing really.

A blog about SQL Server, Books, Movies and life in general

Friday, October 27, 2006

One Of The Reasons I Participate In Forums And Newsgroups

This is one of the reasons I participate in SQL Server newsgroups and forums

A person had the following update statement

On the table that got updated (Object_data) the following trigger was created

I suggested that the person did not need the cursor since he is inserting everything anyway into the object_datahist table

I decided to modify the trigger as follows

I also suggested modifying the following line from the update query

and convert(varchar,injurydate,101) = convert(varchar,claim_injury_date,101)

to

and injurydate = claim_injury_date

since the dates are the same anyway

This is the response I got

"I tested removing the cursor driven trigger from the Object_Data table in development and replaced it with the set based up date you provided. Here is what happened:

20,784 records in the test.

1. Using the current trigger I canceled the update after an hour and eleven minutes of running. It had updated 10,218 rows.

2. Using the set based trigger it ran in eleven seconds and generated the correct amount of history.

That's really something to me! Thanks again!"

You see, this is one of the reasons I participate in forums/newsgroups because it feels good to help other people. Another reason is that you can look at the responses from the SQL MVP's and see how they would handle certain situations. I remember running into problems once and I also remembered that I saw this same problem in a newsgroup. after that it's a quick Google and you find your answer

A person had the following update statement

Update Object_data

set External_Claim_Number = BWCClaimNum

From yson_Claims_eDocs_10_27_2006

where LastName = INJ_lname

and FirstName = INJ_fname

and SSN = inj_ssn

and convert(varchar,injurydate,101) = convert(varchar,claim_injury_date,101)

and convert(varchar,pkclaim) = internal_claim_Number

and BWCClaimNum is not null

and BWCClaimNum <> External_Claim_Number

On the table that got updated (Object_data) the following trigger was created

SET QUOTED_IDENTIFIER ON

GO

SET ANSI_NULLS ON

GO

ALTER TRIGGER [tr_Object_Data_IU] ON [dbo].[OBJECT_DATA]

FOR UPDATE,Insert

AS

declare @rows int

declare @object_Data_ID int

set @rows = @@ROWCOUNT

if @rows = 0

return

if @rows = 1

begin

select @object_Data_Id = object_data_id

from inserted

goto singlerecord

end

declare curObject insensitive cursor for

select object_data_id

from inserted

open curObject

fetch next from curObject into @object_data_id

while (@@fetch_status <> -1)

begin

singlerecord:

insert object_datahist (OBJECT_DATA_ID,OBJECT_ID,INJ_SSN,INJ_LNAME,INJ_FNAME,INJ_SEX,EXTERNAL_CLAIM_NUMBER,

INTERNAL_CLAIM_NUMBER,CLAIM_INJURY_DATE,CLAIM_DOCUMENT_DOS,CLAIM_RISK_NUMBER,DOCUMENT_TYPE,

DOCUMENT_SUBTYPE,DOCUMENT_SUBTYPE2,EMPLOYER,BILL_NUMBER,PROVIDER_NUMBER,OTHER,GROUP_ID,GROUP_NAME,

YEAR_NUM,DateChanged,ChangedBy,Change

,PROV_FAX_NUM)

select OBJECT_DATA_ID,OBJECT_ID,INJ_SSN,INJ_LNAME,INJ_FNAME,INJ_SEX,EXTERNAL_CLAIM_NUMBER,INTERNAL_CLAIM_NUMBER,

CLAIM_INJURY_DATE,CLAIM_DOCUMENT_DOS,CLAIM_RISK_NUMBER,DOCUMENT_TYPE,DOCUMENT_SUBTYPE,

DOCUMENT_SUBTYPE2,EMPLOYER,BILL_NUMBER,PROVIDER_NUMBER,OTHER,GROUP_ID,GROUP_NAME,YEAR_NUM,

getdate(),user_name(),'U'

,PROV_FAX_NUM

from inserted where object_data_id = @object_data_id

if @rows = 1

return

fetch next from curObject into @object_data_id

end

close curObject

deallocate curObject

I suggested that the person did not need the cursor since he is inserting everything anyway into the object_datahist table

I decided to modify the trigger as follows

SET QUOTED_IDENTIFIER ON

GO

SET ANSI_NULLS ON

GO

ALTER TRIGGER [tr_Object_Data_IU] ON [dbo].[OBJECT_DATA]

FOR UPDATE,Insert

AS

IF @@ROWCOUNT =0

RETURN

insert object_datahist (OBJECT_DATA_ID,OBJECT_ID,INJ_SSN,INJ_LNAME,INJ_FNAME,INJ_SEX,EXTERNAL_CLAIM_NUMBER,

INTERNAL_CLAIM_NUMBER,CLAIM_INJURY_DATE,CLAIM_DOCUMENT_DOS,CLAIM_RISK_NUMBER,DOCUMENT_TYPE,

DOCUMENT_SUBTYPE,DOCUMENT_SUBTYPE2,EMPLOYER,BILL_NUMBER,PROVIDER_NUMBER,OTHER,GROUP_ID,GROUP_NAME,

YEAR_NUM,DateChanged,ChangedBy,Change

,PROV_FAX_NUM)

select OBJECT_DATA_ID,OBJECT_ID,INJ_SSN,INJ_LNAME,INJ_FNAME,INJ_SEX,EXTERNAL_CLAIM_NUMBER,INTERNAL_CLAIM_NUMBER,

CLAIM_INJURY_DATE,CLAIM_DOCUMENT_DOS,CLAIM_RISK_NUMBER,DOCUMENT_TYPE,DOCUMENT_SUBTYPE,

DOCUMENT_SUBTYPE2,EMPLOYER,BILL_NUMBER,PROVIDER_NUMBER,OTHER,GROUP_ID,GROUP_NAME,YEAR_NUM,

getdate(),user_name(),'U'

,PROV_FAX_NUM

from inserted

I also suggested modifying the following line from the update query

and convert(varchar,injurydate,101) = convert(varchar,claim_injury_date,101)

to

and injurydate = claim_injury_date

since the dates are the same anyway

This is the response I got

"I tested removing the cursor driven trigger from the Object_Data table in development and replaced it with the set based up date you provided. Here is what happened:

20,784 records in the test.

1. Using the current trigger I canceled the update after an hour and eleven minutes of running. It had updated 10,218 rows.

2. Using the set based trigger it ran in eleven seconds and generated the correct amount of history.

That's really something to me! Thanks again!"

You see, this is one of the reasons I participate in forums/newsgroups because it feels good to help other people. Another reason is that you can look at the responses from the SQL MVP's and see how they would handle certain situations. I remember running into problems once and I also remembered that I saw this same problem in a newsgroup. after that it's a quick Google and you find your answer

What Are You Watching On Halloween?

I have difficulty picking a movie to watch on Halloween.

Did any of you (my readers) see any of these movies below? Which movie would you pick?

Omen (2006 Edition)

The Gingerdead Man

Saw I

Saw II

Exorcist (Begining)

Seed of Chucky

Ringu

Texas Chainsaw

Silent hill

Hills have eyes

Underworld (evolutions)

Leave me a comment or send me an email by using the Contact Me link.

Did any of you (my readers) see any of these movies below? Which movie would you pick?

Omen (2006 Edition)

The Gingerdead Man

Saw I

Saw II

Exorcist (Begining)

Seed of Chucky

Ringu

Texas Chainsaw

Silent hill

Hills have eyes

Underworld (evolutions)

Leave me a comment or send me an email by using the Contact Me link.

Thursday, October 26, 2006

SQL Server experienced revenue growth of over 30%

Microsoft Corp. today announced first quarter revenue of $10.81 billion for the period ended September 30, 2006, an 11% increase over the same quarter of the prior year. Operating income for the quarter was $4.47 billion, an 11% increase compared with $4.05 billion in the prior year period. Net income and diluted earnings per share for the first quarter were $3.48 billion and $0.35 per share. For the same quarter of the previous year, net income and diluted earnings per share were $3.14 billion and $0.29, including a $0.02 per share charge for certain legal charges.

Server & Tools revenue increased 17% over the comparable quarter in the prior year, reflecting healthy performance for offerings such as SQL Server™ 2005, Windows Server®, Visual Studio® 2005 and BizTalk® Server. SQL Server experienced revenue growth of over 30%, as customers are increasingly deploying SQL Server for their mission critical, transaction-oriented databases.

Good news if you are a SQL Server developer

Server & Tools revenue increased 17% over the comparable quarter in the prior year, reflecting healthy performance for offerings such as SQL Server™ 2005, Windows Server®, Visual Studio® 2005 and BizTalk® Server. SQL Server experienced revenue growth of over 30%, as customers are increasingly deploying SQL Server for their mission critical, transaction-oriented databases.

Good news if you are a SQL Server developer

Wednesday, October 25, 2006

Enable xp_cmdshell In SQL Server 2005 With The Surface Configuration Tool Or With A Script

This question popped up in a newsgroup today. How do I enable xp_cmdshell in SQL Server 2005? Well you can do it two ways; one is with a script and the other with the Surface Configuration Tool

Let's start with the Surface Configuration Tool

Surface Configuration Tool

You have to navigate to the tool from the start button, the path is below

Programs-->Microsoft SQL server 2005-->Configuration Tools-->Surface Configuration Tool

Then select Surface Area Configuration for Features (bottom one)

Expand Database Engine go all the way down to xp_cmdshell and click enable xp_cmdshell and hit apply

SQL Script

To do it with a script use the one below

EXECUTE sp_configure 'show advanced options', 1

RECONFIGURE WITH OVERRIDE

GO

EXECUTE sp_configure 'xp_cmdshell', '1'

RECONFIGURE WITH OVERRIDE

GO

EXECUTE sp_configure 'show advanced options', 0

RECONFIGURE WITH OVERRIDE

GO

Let's start with the Surface Configuration Tool

Surface Configuration Tool

You have to navigate to the tool from the start button, the path is below

Programs-->Microsoft SQL server 2005-->Configuration Tools-->Surface Configuration Tool

Then select Surface Area Configuration for Features (bottom one)

Expand Database Engine go all the way down to xp_cmdshell and click enable xp_cmdshell and hit apply

SQL Script

To do it with a script use the one below

EXECUTE sp_configure 'show advanced options', 1

RECONFIGURE WITH OVERRIDE

GO

EXECUTE sp_configure 'xp_cmdshell', '1'

RECONFIGURE WITH OVERRIDE

GO

EXECUTE sp_configure 'show advanced options', 0

RECONFIGURE WITH OVERRIDE

GO

http://sqlservercode.blogspot.com In Top 100000 On Technorati

This blog is currently ranked 90,981 on Technorati

Rank: 90,981 (65 links from 30 blogs)

Not bad I guess, I wonder how long it will take to reach 50000?

The rank was 150000 just a couple of months ago so it's going towards the top (but will never reach it ;-( )

You can check the ranking here http://www.technorati.com/search/sqlservercode.blogspot.com and see who is linking here

Rank: 90,981 (65 links from 30 blogs)

Not bad I guess, I wonder how long it will take to reach 50000?

The rank was 150000 just a couple of months ago so it's going towards the top (but will never reach it ;-( )

You can check the ranking here http://www.technorati.com/search/sqlservercode.blogspot.com and see who is linking here

Microsoft Changing Live Search Back To MSN Search

Just saw The Island the other day and I must say I liked it a lot. I don't know why the movie didn't do as well as expected. The stars of the movie are Scarlett Johansson (Lost in Translation, Girl with a Pearl Earring) and Ewan McGregor ( Star Wars: Episode III - Revenge of the Sith , Trainspotting , Moulin Rouge!) other prominent roles are for Sean Bean, Steve Buscemi and Michael Clarke Duncan.. The movie is directed by Michael Bay who did Pearl Harbor and Armageddon. Michael Bay is currently working on The Hitcher and Transformers The links are all to IMDB in case you want more details about any of the movies.

The best part of the movie: the car chase.

The car chase is probably the best ever; it even beats the one from the second Matrix movie.

The movie takes place in the year 2050 and people have clones made as insurance; if their liver gives up then they just take the liver from the clone. These clones live isolated underground and have no idea what's going on; they think that they survived some contamination. Every now and then a lottery takes place and the winner goes to the Island. In reality the winner goes to the operating table. I won't give away the whole story you watch that yourself

There is some major product placement in this movie. The Xbox is featured in the movie alongside MSN Search; that's right not Windows Live Search. I guess they can always release a director's cut and 'fix' that. Cadillac has some top of the line car that costs half a million dollars; How much is half a million in the year 2050? To me it seems that a Porsche Carrera would cost about $500000 in about 50 years. Anyway the 'real' Ewan in the movie, who is very rich complains when his 'very' expensive car gets trashed. Would he really get that upset over the car; his clone is more expensive and he tries to kill the clone.

If you can catch the movie on HBO/On Demand then watch this movie. If you don’t want to watch the whole movie then at least watch the car scene; you won’t regret it

The best part of the movie: the car chase.

The car chase is probably the best ever; it even beats the one from the second Matrix movie.

The movie takes place in the year 2050 and people have clones made as insurance; if their liver gives up then they just take the liver from the clone. These clones live isolated underground and have no idea what's going on; they think that they survived some contamination. Every now and then a lottery takes place and the winner goes to the Island. In reality the winner goes to the operating table. I won't give away the whole story you watch that yourself

There is some major product placement in this movie. The Xbox is featured in the movie alongside MSN Search; that's right not Windows Live Search. I guess they can always release a director's cut and 'fix' that. Cadillac has some top of the line car that costs half a million dollars; How much is half a million in the year 2050? To me it seems that a Porsche Carrera would cost about $500000 in about 50 years. Anyway the 'real' Ewan in the movie, who is very rich complains when his 'very' expensive car gets trashed. Would he really get that upset over the car; his clone is more expensive and he tries to kill the clone.

If you can catch the movie on HBO/On Demand then watch this movie. If you don’t want to watch the whole movie then at least watch the car scene; you won’t regret it

Monday, October 23, 2006

Do You Know How Between Works With Dates?

Do you use between to return data that has dates? Do you know that between will get everything since midnight from the first criteria and up to midnight exactly from the second criteria. If you do BETWEEN 2006-10-01 AND 2006-10-02 then all the values that are greater or equal than 2006-10-01 and less or equal to 2006-10-02 will be returned. So no values after 2006-10-02 midnight will be returned.

Let's test this out, first let's create this table

CREATE TABLE SomeDates (DateColumn DATETIME)

Insert 2 values

INSERT INTO SomeDates VALUES('2006-10-02 00:00:00.000')

INSERT INTO SomeDates VALUES('2006-10-01 00:00:00.000')

Return everything between '2006-10-01' and '2006-10-02'

SELECT *

FROM SomeDates

WHERE DateColumn BETWEEN '20061001' AND '20061002'

ORDER BY DateColumn

This works without a problem

Let's add some more dates including the time portion

INSERT INTO SomeDates VALUES('2006-10-02 00:01:00.000')

INSERT INTO SomeDates VALUES('2006-10-02 00:00:59.000')

INSERT INTO SomeDates VALUES('2006-10-02 00:00:01.000')

INSERT INTO SomeDates VALUES('2006-10-01 00:01:00.000')

INSERT INTO SomeDates VALUES('2006-10-01 00:12:00.000')

INSERT INTO SomeDates VALUES('2006-10-01 23:00:00.000')

Return everything between '2006-10-01' and '2006-10-02'

SELECT *

FROM SomeDates

WHERE DateColumn BETWEEN '20061001' AND '20061002'

ORDER BY DateColumn

Here is where it goes wrong; for 2006-10-02 only the midnight value is returned the other ones are ignored

Now if we change 2006-10-02 to 2006-10-03 we get what we want

SELECT *

FROM SomeDates

WHERE DateColumn BETWEEN '20061001' AND '20061003'

ORDER BY DateColumn

Now insert a value for 2006-10-03 (midnight)

INSERT INTO SomeDates VALUES('2006-10-03 00:00:00.000')

Run the query again

SELECT *

FROM SomeDates

WHERE DateColumn BETWEEN '20061001' AND '20061003'

ORDER BY DateColumn

We get back 2006-10-03 00:00:00.000; between will return the date if it is exactly midnight

If you use >= and < then you get exactly what you need

SELECT *

FROM SomeDates

WHERE DateColumn >= '20061001' AND DateColumn < '20061003'

ORDER BY DateColumn

--Clean up

DROP TABLE SomeDates

So be careful when using between because you might get back rows that you did not expect to get back and it might mess up your reporting if you do counts or sums

Let's test this out, first let's create this table

CREATE TABLE SomeDates (DateColumn DATETIME)

Insert 2 values

INSERT INTO SomeDates VALUES('2006-10-02 00:00:00.000')

INSERT INTO SomeDates VALUES('2006-10-01 00:00:00.000')

Return everything between '2006-10-01' and '2006-10-02'

SELECT *

FROM SomeDates

WHERE DateColumn BETWEEN '20061001' AND '20061002'

ORDER BY DateColumn

This works without a problem

Let's add some more dates including the time portion

INSERT INTO SomeDates VALUES('2006-10-02 00:01:00.000')

INSERT INTO SomeDates VALUES('2006-10-02 00:00:59.000')

INSERT INTO SomeDates VALUES('2006-10-02 00:00:01.000')

INSERT INTO SomeDates VALUES('2006-10-01 00:01:00.000')

INSERT INTO SomeDates VALUES('2006-10-01 00:12:00.000')

INSERT INTO SomeDates VALUES('2006-10-01 23:00:00.000')

Return everything between '2006-10-01' and '2006-10-02'

SELECT *

FROM SomeDates

WHERE DateColumn BETWEEN '20061001' AND '20061002'

ORDER BY DateColumn

Here is where it goes wrong; for 2006-10-02 only the midnight value is returned the other ones are ignored

Now if we change 2006-10-02 to 2006-10-03 we get what we want

SELECT *

FROM SomeDates

WHERE DateColumn BETWEEN '20061001' AND '20061003'

ORDER BY DateColumn

Now insert a value for 2006-10-03 (midnight)

INSERT INTO SomeDates VALUES('2006-10-03 00:00:00.000')

Run the query again

SELECT *

FROM SomeDates

WHERE DateColumn BETWEEN '20061001' AND '20061003'

ORDER BY DateColumn

We get back 2006-10-03 00:00:00.000; between will return the date if it is exactly midnight

If you use >= and < then you get exactly what you need

SELECT *

FROM SomeDates

WHERE DateColumn >= '20061001' AND DateColumn < '20061003'

ORDER BY DateColumn

--Clean up

DROP TABLE SomeDates

So be careful when using between because you might get back rows that you did not expect to get back and it might mess up your reporting if you do counts or sums

Sunday, October 22, 2006

SQL Server 2005 Practical Troubleshooting: The Database Engine Sample Chapter Available

Ken Henderson has posted a sample chapter from the forthcoming book SQL Server 2005 Practical Troubleshooting: The Database Engine on his blog. The sample chapter is: Chapter 4 Procedure Cache Issues. This is a must read for anyone who is troubleshooting SQL Server queries and procedures. Some of the things covered are: Cached Object Leaks, Cursor Leaks, Parameter Sniffing, Excessive Compilation, Poor Plan Reuse and How Cache Lookups Work. I think that this is the first time I have seen parameter sniffing described in a book. Check it out and let me know what you think.

SQLQueryStress Beta 1 Available For Download

Adam Machanic has made available beta 1 of the SQLQueryStress tool. It provides some support for query parameterization and options for collection of I/O and CPU metrics. It is not intended to replace tools such as Visual Studio Team System's load tests, but rather to be a simple and easy-to-use tool in the DBA or database developer's kit.

To download the tool and read the documentation go here: http://www.datamanipulation.net/SQLQueryStress/

To download the tool and read the documentation go here: http://www.datamanipulation.net/SQLQueryStress/

Wednesday, October 18, 2006

Visual Studio Team Edition for Database Professionals CTP 6 Is Available For Download

Gert Drapers has announced that CTP6 of Visual Studio Team Edition for Database Professionals is available for download

This is an overview of the highlights in CTP6

This is an overview of the highlights in CTP6

- Full support for SQL Server 2000 & 2005 objects, the parser work has been completed

- Extended Properties support, we know import and deploy all your extended properties

- Inline constraint support, if you do not want to separate them out, we allow them inline as well

- Pre- and post deployment scripts population during Import Script

- Full support for command line build & deploy and Team Build

- A new Import Schema Wizard which is also integrated with the New Project Wizard to make project creation and import a single stop shop

- Synchronize your database project from Schema Compare, compare your project with a database and pull the differences in to the database project

- Schema refactoring is now allowed even if you have files in a warning state

- Resolve 3 and 4 part name usage when the referenced database is locally present, same for linked servers

- The product no longer installs SQL Express; you can pick your own SQL Server 2005 Developer Edition or SQL Server 2005 Enterprise Edition instance on the local box. When you first start the product for the first time we will ask you to choose an local instance to use

- Display detailed Schema Object properties in the VS Property Window for selected objects in the Schema View

- Separation of user target database settings through user project files, this allows users to work against a different target instance without changing the main project file.

- We made great progress on the overall stability and performance of the product across the board, project creation, importing your schema, reloading project and making changes to your schema

- And last but not least we fixed many reported customer problems!

Visit The Data Dude blog for more info

Monday, October 16, 2006

SQL Server Database Publishing Wizard CTP 1 released

SQL Server Hosting Toolkit Released

The goal of the SQL Server Hosting Toolkit is to enable a great experience around SQL Server in shared hosting environments.

The toolkit will eventually consist of a suite of tools and services that hosters can deploy for use by their customers. It will also serve as an incubation vehicle for tools that hosting customers can download and use directly, regardless of whether their hoster has deployed the toolkit

Database Publishing Wizard Community Technology Preview 1

The Database Publishing Wizard enables the deployment of SQL Server 2005 databases into a hosted environment on either a SQL Server 2000 or 2005 server. It generates a SQL script file which can be used to recreate the database in shared hosting environments where the only connectivity to a server is through a web-based control panel with a scripting window.

The goal of the SQL Server Hosting Toolkit is to enable a great experience around SQL Server in shared hosting environments.

The toolkit will eventually consist of a suite of tools and services that hosters can deploy for use by their customers. It will also serve as an incubation vehicle for tools that hosting customers can download and use directly, regardless of whether their hoster has deployed the toolkit

Database Publishing Wizard Community Technology Preview 1

The Database Publishing Wizard enables the deployment of SQL Server 2005 databases into a hosted environment on either a SQL Server 2000 or 2005 server. It generates a SQL script file which can be used to recreate the database in shared hosting environments where the only connectivity to a server is through a web-based control panel with a scripting window.

Scrum

I am having Scrum training tomorrow. So what is Scrum anyway?

Scrum is an agile method for project management. Scrum was named as a project management style in auto and consumer product manufacturing companies by Takeuchi and Nonaka in "The New New Product Development Game" (Harvard Business Review, Jan-Feb 1986). They noted that projects using small, cross-functional teams historically produce the best results, and likened these high-performing teams to the scrum formation in Rugby. Jeff Sutherland, John Scumniotales, and Jeff McKenna documented, conceived and implemented Scrum as it is described below at Easel Corporation in 1993, incorporating team management styles noted by Takeuchi and Nonaka. In 1995, Ken Schwaber formalized the definition of Scrum and helped deploy it worldwide in software development.

Its intended use is for management of software development projects, and it has been successfully used to "wrap" Extreme Programming and other development methodologies. However, it can theoretically be applied to any context where a group of people need to work together to achieve a common goal - such as setting up a small school, scientific research projects or planning a wedding.

Although Scrum was intended to be for management of software development projects, it can be used in running maintenance teams, or as a program management approach: Scrum of Scrums.

Characteristics of Scrum

A living backlog of prioritized work to be done;

Completion of a largely fixed set of backlog items in a series of short iterations or sprints;

A brief daily meeting or scrum, at which progress is explained, upcoming work is described and impediments are raised.

A brief planning session in which the backlog items for the sprint will be defined.

A brief heartbeat retrospective, at which all team members reflect about the past sprint.

Scrum is facilitated by a ScrumMaster, whose primary job is to remove impediments to the ability of the team to deliver the sprint goal. The ScrumMaster is not the leader of the team (as they are self-organising) but acts as a productivity buffer between the team and any destabilising influences.

Scrum enables the creation of self-organising teams by encouraging verbal communication across all team members and across all disciplines that are involved in the project.

A key principle of Scrum is its recognition that fundamentally empirical challenges cannot be addressed successfully in a traditional "process control" manner. As such, Scrum adopts an empirical approach - accepting that the problem cannot be fully understood or defined, focusing instead on maximizing the team's ability to respond in an agile manner to emerging challenges.

Notably missing from Scrum is the "cookbook" approach to project management exemplified in the Project Management Body of Knowledge or Prince2 - both of which have as their goal quality through application of a series of prescribed processes.

This is what the training will cover tomorrow

Creating An Agile Environment (An introduction to Scrum)

Presentation Outline:

I. Definition of Agile

II. Agile Principles and Values

III. Historical Background in Software Development

IV. Overview of Scrum

V. Roles and Responsibilities

VI. Benefits and Challenges in using Scrum

VII. Agile adoption patterns for large organizations

VIII. Questions and Recommended Reading

What is Scrum? Scrum is an iterative, incremental process for developing any product or managing any work. It produces a potentially shippable set of functionality at the end of every iteration. It's attributes are:

So my question to you is, do you use Scrum? And if you do then did it improve the process of development? We do use it actually but the developers did not get training yet.

Scrum is an agile method for project management. Scrum was named as a project management style in auto and consumer product manufacturing companies by Takeuchi and Nonaka in "The New New Product Development Game" (Harvard Business Review, Jan-Feb 1986). They noted that projects using small, cross-functional teams historically produce the best results, and likened these high-performing teams to the scrum formation in Rugby. Jeff Sutherland, John Scumniotales, and Jeff McKenna documented, conceived and implemented Scrum as it is described below at Easel Corporation in 1993, incorporating team management styles noted by Takeuchi and Nonaka. In 1995, Ken Schwaber formalized the definition of Scrum and helped deploy it worldwide in software development.

Its intended use is for management of software development projects, and it has been successfully used to "wrap" Extreme Programming and other development methodologies. However, it can theoretically be applied to any context where a group of people need to work together to achieve a common goal - such as setting up a small school, scientific research projects or planning a wedding.

Although Scrum was intended to be for management of software development projects, it can be used in running maintenance teams, or as a program management approach: Scrum of Scrums.

Characteristics of Scrum

A living backlog of prioritized work to be done;

Completion of a largely fixed set of backlog items in a series of short iterations or sprints;

A brief daily meeting or scrum, at which progress is explained, upcoming work is described and impediments are raised.

A brief planning session in which the backlog items for the sprint will be defined.

A brief heartbeat retrospective, at which all team members reflect about the past sprint.

Scrum is facilitated by a ScrumMaster, whose primary job is to remove impediments to the ability of the team to deliver the sprint goal. The ScrumMaster is not the leader of the team (as they are self-organising) but acts as a productivity buffer between the team and any destabilising influences.

Scrum enables the creation of self-organising teams by encouraging verbal communication across all team members and across all disciplines that are involved in the project.

A key principle of Scrum is its recognition that fundamentally empirical challenges cannot be addressed successfully in a traditional "process control" manner. As such, Scrum adopts an empirical approach - accepting that the problem cannot be fully understood or defined, focusing instead on maximizing the team's ability to respond in an agile manner to emerging challenges.

Notably missing from Scrum is the "cookbook" approach to project management exemplified in the Project Management Body of Knowledge or Prince2 - both of which have as their goal quality through application of a series of prescribed processes.

This is what the training will cover tomorrow

Creating An Agile Environment (An introduction to Scrum)

Presentation Outline:

I. Definition of Agile

II. Agile Principles and Values

III. Historical Background in Software Development

IV. Overview of Scrum

V. Roles and Responsibilities

VI. Benefits and Challenges in using Scrum

VII. Agile adoption patterns for large organizations

VIII. Questions and Recommended Reading

What is Scrum? Scrum is an iterative, incremental process for developing any product or managing any work. It produces a potentially shippable set of functionality at the end of every iteration. It's attributes are:

- Scrum is an agile process to manage and control development work.

- Scrum is a wrapper for existing engineering practices.

- Scrum is a team-based approach to iteratively, incrementally develop systems and products when requirements are rapidly changing

- Scrum is a process that controls the chaos of conflicting interests and needs.

- Scrum is a way to improve communications and maximize co-operation.

- Scrum is a way to detect and cause the removal of anything that gets in the way of developing and delivering products.

- Scrum is scalable from single projects to entire organizations. Scrum has controlled and organized development and implementation for multiple interrelated products and projects with over a thousand developers and implementers.

- Scrum is a way for everyone to feel good about their job, their contributions, and that they have done the very best they possibly could.

So my question to you is, do you use Scrum? And if you do then did it improve the process of development? We do use it actually but the developers did not get training yet.

Hello OUTPUT See You Later Trigger Or Perhaps Not?

SQL Server 2005 has added an optional OUTPUT clause to UPDATE, INSERT and DELETE commands, this enables you to accomplish almost the same task as if you would have used a after trigger in SQL Server 2000

Let's take a closer look, let's start with the syntax

The syntax for an insert is like this

INSERT INTO TableNAme

OUTPUT Inserted

VALUES (....)

The syntax for an update is like this

UPDATE TableNAme

SET ......

OUTPUT Deleted,Inserted

WHERE ....

The syntax for a delete is like this

DELETE TableName

OUTPUT Deleted

WHERE ....

So the OUTPUT comes right before the WHERE or the VALUES part of the statement

Also as you can see Insert has Inserted as part of the OUTPUT Clause, Update has Inserted and Deleted and Delete has Deleted as part of the OUTPUT Clause, this is very simmilar to the inserted and deleted pseudo-tables in triggers

Let's test it out, first we have to create a table

CREATE TABLE TestOutput

(

ID INT NOT NULL,

Description VARCHAR(50) NOT NULL,

)

INSERT INTO TestOutput (ID, Description) VALUES (1, 'Desc1')

INSERT INTO TestOutput (ID, Description) VALUES (2, 'Desc2')

INSERT INTO TestOutput (ID, Description) VALUES (3, 'Desc3')

Let's show what we just inserted

INSERT INTO TestOutput (ID, Description)

OUTPUT Inserted.ID AS ID,Inserted.Description AS Description

VALUES (4, 'Desc4')

The * wildcard works also in this case

INSERT INTO TestOutput (ID, Description)

OUTPUT Inserted.*

VALUES (5, 'Desc5')

Let's try it with a delete statement

DELETE TestOutput

OUTPUT Deleted.*

WHERE ID = 4

Let's try to use Inserted here

DELETE TestOutput

OUTPUT Inserted.*

WHERE ID = 4

The message that is returned is this

Server: Msg 107, Level 15, State 1, Line 1

The column prefix 'Inserted' does not match with a table name or alias name used in the query.

So as you can see Inserted can not be used with a DELETE command

Let's try using deleted with an insert command

INSERT INTO TestOutput (ID, Description)

OUTPUT Deleted.*

VALUES (5, 'Desc5')

Same story, Deleted can not be used with an INSERT command

Server: Msg 107, Level 15, State 1, Line 1

The column prefix 'Deleted' does not match with a table name or alias name used in the query.

Let's take a look at the update statement

UPDATE TestOutput

SET ID = 4

OUTPUT DELETED.ID AS OldId, DELETED.Description AS oldDescription ,

Inserted.ID AS New_ID , Inserted.Description AS Newdescription

WHERE ID = 5

Create a table where we will insert our output results into

CREATE TABLE #hist (OldID INT,OldDesc VARCHAR(50),

New_ID INT,NewDesc VARCHAR(50),UpdatedTime DATETIME)

Let's insert the old and new values into a temp table (audit trail..kind of)

UPDATE TestOutput

SET ID = 666

OUTPUT DELETED.ID AS OldId, DELETED.Description AS oldDescription ,

Inserted.ID AS New_ID , Inserted.Description AS Newdescription,GETDATE() INTO #hist

WHERE ID = 3

let's see what we inserted

SELECT * FROM #hist

Remember this message?

Server: Msg 512, Level 16, State 1, Line 2

Subquery returned more than 1 value. This is not permitted when the subquery follows =, !=, <, <= , >, >= or when the subquery is used as an expression.

You would get this in a trigger when a trigger would fire after a multi row statement and you tried to assign a value to a variable. Since you can't assign values to variable with the OUTPUT clause you won't have this problem

How does identity work with the output clause?

CREATE TABLE TestOutput2

(

ID INT NOT NULL IDENTITY,

Description VARCHAR(50) NOT NULL,

)

INSERT INTO TestOutput2 (Description)

OUTPUT Inserted.*

SELECT 'Desc1' UNION ALL

SELECT 'Desc2'

We can not use SCOPE_IDENTITY() or @@IDENTITY, the value for those will both be 2, run the following example to see this

INSERT INTO TestOutput2 (Description)

OUTPUT SCOPE_IDENTITY(),@@IDENTITY,Inserted.*

SELECT 'Desc3' UNION ALL

SELECT 'Desc4'

The bottom line.

The OUTPUT clause is very useful but I don't think it's a replacement for triggers. A trigger can protect your table for any user (unless you execute a alter table..disable trigger statement)

If you use OUTPUT exclusively, then if someone uses a tool like Enterprise Manager to insert a row into your table there is nothing to fire if you want to create an audit trail for example

So it has it's uses but won't replace the trigger

Let's take a closer look, let's start with the syntax

The syntax for an insert is like this

INSERT INTO TableNAme

OUTPUT Inserted

VALUES (....)

The syntax for an update is like this

UPDATE TableNAme

SET ......

OUTPUT Deleted,Inserted

WHERE ....

The syntax for a delete is like this

DELETE TableName

OUTPUT Deleted

WHERE ....

So the OUTPUT comes right before the WHERE or the VALUES part of the statement

Also as you can see Insert has Inserted as part of the OUTPUT Clause, Update has Inserted and Deleted and Delete has Deleted as part of the OUTPUT Clause, this is very simmilar to the inserted and deleted pseudo-tables in triggers

Let's test it out, first we have to create a table

CREATE TABLE TestOutput

(

ID INT NOT NULL,

Description VARCHAR(50) NOT NULL,

)

INSERT INTO TestOutput (ID, Description) VALUES (1, 'Desc1')

INSERT INTO TestOutput (ID, Description) VALUES (2, 'Desc2')

INSERT INTO TestOutput (ID, Description) VALUES (3, 'Desc3')

Let's show what we just inserted

INSERT INTO TestOutput (ID, Description)

OUTPUT Inserted.ID AS ID,Inserted.Description AS Description

VALUES (4, 'Desc4')

The * wildcard works also in this case

INSERT INTO TestOutput (ID, Description)

OUTPUT Inserted.*

VALUES (5, 'Desc5')

Let's try it with a delete statement

DELETE TestOutput

OUTPUT Deleted.*

WHERE ID = 4

Let's try to use Inserted here

DELETE TestOutput

OUTPUT Inserted.*

WHERE ID = 4

The message that is returned is this

Server: Msg 107, Level 15, State 1, Line 1

The column prefix 'Inserted' does not match with a table name or alias name used in the query.

So as you can see Inserted can not be used with a DELETE command

Let's try using deleted with an insert command

INSERT INTO TestOutput (ID, Description)

OUTPUT Deleted.*

VALUES (5, 'Desc5')

Same story, Deleted can not be used with an INSERT command

Server: Msg 107, Level 15, State 1, Line 1

The column prefix 'Deleted' does not match with a table name or alias name used in the query.

Let's take a look at the update statement

UPDATE TestOutput

SET ID = 4

OUTPUT DELETED.ID AS OldId, DELETED.Description AS oldDescription ,

Inserted.ID AS New_ID , Inserted.Description AS Newdescription

WHERE ID = 5

Create a table where we will insert our output results into

CREATE TABLE #hist (OldID INT,OldDesc VARCHAR(50),

New_ID INT,NewDesc VARCHAR(50),UpdatedTime DATETIME)

Let's insert the old and new values into a temp table (audit trail..kind of)

UPDATE TestOutput

SET ID = 666

OUTPUT DELETED.ID AS OldId, DELETED.Description AS oldDescription ,

Inserted.ID AS New_ID , Inserted.Description AS Newdescription,GETDATE() INTO #hist

WHERE ID = 3

let's see what we inserted

SELECT * FROM #hist

Remember this message?

Server: Msg 512, Level 16, State 1, Line 2

Subquery returned more than 1 value. This is not permitted when the subquery follows =, !=, <, <= , >, >= or when the subquery is used as an expression.

You would get this in a trigger when a trigger would fire after a multi row statement and you tried to assign a value to a variable. Since you can't assign values to variable with the OUTPUT clause you won't have this problem

How does identity work with the output clause?

CREATE TABLE TestOutput2

(

ID INT NOT NULL IDENTITY,

Description VARCHAR(50) NOT NULL,

)

INSERT INTO TestOutput2 (Description)

OUTPUT Inserted.*

SELECT 'Desc1' UNION ALL

SELECT 'Desc2'

We can not use SCOPE_IDENTITY() or @@IDENTITY, the value for those will both be 2, run the following example to see this

INSERT INTO TestOutput2 (Description)

OUTPUT SCOPE_IDENTITY(),@@IDENTITY,Inserted.*

SELECT 'Desc3' UNION ALL

SELECT 'Desc4'

The bottom line.

The OUTPUT clause is very useful but I don't think it's a replacement for triggers. A trigger can protect your table for any user (unless you execute a alter table..disable trigger statement)

If you use OUTPUT exclusively, then if someone uses a tool like Enterprise Manager to insert a row into your table there is nothing to fire if you want to create an audit trail for example

So it has it's uses but won't replace the trigger

Sunday, October 15, 2006

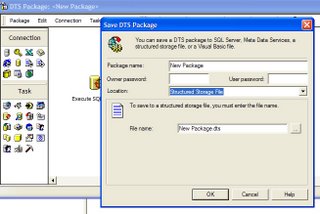

Saving A DTS Package On Another Server

How do I save a DTS package on another server? I have answered this question so many times in the past year or so that I decided to write a little post about it.

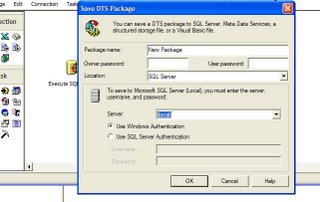

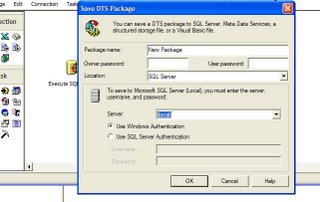

First way: Just save it on the other server

From the location dropdown select SQL server, enter the IP or sever name and supply the credentials and you are done. click on the image below this text to see a larger image

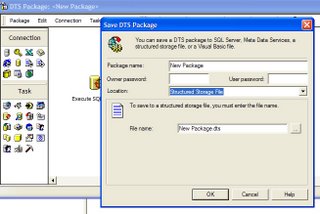

Second way: Save the package as a Structured Storage File

Most of the times you won't be able to save the file directly on the destination server. The server might be in a DMZ and behind a couple of firewalls. In that case you can save the package as a Structured Storage File. From the dropdown select Structured Storage File (see picture below) Give the file a name and save it in a folder. You probably won't be able to save the file on the destination server, save the file on your own machine or on a machine which the destination server can access.

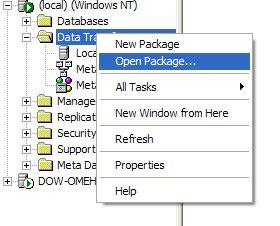

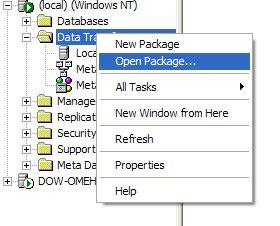

Then to open the package on the other server do this:

Right click on the Data Transformation Services folder, select open packages and navigate to the location where the Structured Storage File has been saved (see picture below)

And that is it.

First way: Just save it on the other server

From the location dropdown select SQL server, enter the IP or sever name and supply the credentials and you are done. click on the image below this text to see a larger image

Second way: Save the package as a Structured Storage File

Most of the times you won't be able to save the file directly on the destination server. The server might be in a DMZ and behind a couple of firewalls. In that case you can save the package as a Structured Storage File. From the dropdown select Structured Storage File (see picture below) Give the file a name and save it in a folder. You probably won't be able to save the file on the destination server, save the file on your own machine or on a machine which the destination server can access.

Then to open the package on the other server do this:

Right click on the Data Transformation Services folder, select open packages and navigate to the location where the Structured Storage File has been saved (see picture below)

And that is it.

Saturday, October 14, 2006

Datamining Speech In SQL Server 2005

I decided to capture everything that my 2 and a half year old son says, store it in a SQL server 2005 database and run some statistics

This is the outcome in order of most words used

NO

Watch

Movie

Barney

Blues Clues

Dada

Mama

Hungry

Cookies

Chocolate

Icecream

As you can see dada is higher than mama (by a count of one)

So this is interesting, as a child approaches the terrible two’s the most used word is no. The second interesting thing is that watch was used 6300 times and Barney, Movie and Blues Clues 2100 times each. It looks like my son likes his entertainment evenly split. And of course junk food is also high on the list, broccoli is not even in the top 100

So how did I do this? Well I used this tool called Komodo Unnatural Speaking. This is a chip embedded in a sticker. The chip captures all speech and because it’s a RFID tag whenever my son passes a certain spot in the house the data is downloaded to my computer and the chip is cleared. A SSIS package uses Fuzzy Logic to process the data (a toddler’s speech is sometimes gibberish). We get rid of noise words and the results are stored in a table.

Pretty interesting don't you think? ;-)

This is the outcome in order of most words used

NO

Watch

Movie

Barney

Blues Clues

Dada

Mama

Hungry

Cookies

Chocolate

Icecream

As you can see dada is higher than mama (by a count of one)

So this is interesting, as a child approaches the terrible two’s the most used word is no. The second interesting thing is that watch was used 6300 times and Barney, Movie and Blues Clues 2100 times each. It looks like my son likes his entertainment evenly split. And of course junk food is also high on the list, broccoli is not even in the top 100

So how did I do this? Well I used this tool called Komodo Unnatural Speaking. This is a chip embedded in a sticker. The chip captures all speech and because it’s a RFID tag whenever my son passes a certain spot in the house the data is downloaded to my computer and the chip is cleared. A SSIS package uses Fuzzy Logic to process the data (a toddler’s speech is sometimes gibberish). We get rid of noise words and the results are stored in a table.

Pretty interesting don't you think? ;-)

Friday, October 13, 2006

SQL Teaser/Puzzle Inspired By Hugo Kornelis

Hugo Kornelis has posted an interesting article about using STUFF

The link to that article is here

He has a challenge in that article, the challenge is this:

Create this table

CREATE TABLE BadData (FullName varchar(20) NOT NULL);

INSERT INTO BadData (FullName)

SELECT 'Clinton, Bill' UNION ALL

SELECT 'Johnson, Lyndon, B.' UNION ALL

SELECT 'Bush, George, H.W.';

Split the names into 3 columns (not fields ;-) ), the shortest query wins

Your output should be this:

LastName FirstName MiddleInitial

Clinton Bill

Johnson Lyndon B.

Bush George H.W.

Hugo Kornelis posted a solution by using STUFF

SELECT FullName,LEFT(FullName, CHARINDEX(', ', FullName) - 1) AS LastName,

STUFF(LEFT(FullName, CHARINDEX(', ', FullName + ', ',CHARINDEX(', ', FullName) + 2) - 1), 1, CHARINDEX(', ', FullName) + 1, '') AS FirstName,

STUFF(FullName, 1,CHARINDEX(', ', FullName + ', ',

CHARINDEX(', ', FullName) + 2), '') AS MiddleInitial

FROM BadData

which according to editplus is 340 characters

I replied with this

SELECT FullName,PARSENAME(FullName2,NameLen+1) AS LastName,

PARSENAME(FullName2,NameLen) AS FirstName,

COALESCE(REPLACE(PARSENAME(FullName2,NameLen-1),'~','.'),'') AS MiddleInitial

FROM(

SELECT LEN(FullName) -LEN(REPLACE(FullName,',','')) AS NameLen,

REPLACE(REPLACE(FullName,'.','~'),', ','.') AS FullName2,FullName

FROM BadData) x

my solution is 338 characters, so 2 characters less ;-)

Of course I could have 'cheated' a little by doing this

SELECT FullName,PARSENAME(F,NameLen+1) AS LastName,

PARSENAME(F,NameLen) AS FirstName,

COALESCE(REPLACE(PARSENAME(F,NameLen-1),'~','.'),'') AS MiddleInitial

FROM(

SELECT LEN(FullName) -LEN(REPLACE(FullName,',','')) AS NameLen,

REPLACE(REPLACE(FullName,'.','~'),', ','.') AS F,FullName

FROM BadData) x

And this is 306 characters

So let's see, who can do it in less characters

The link to that article is here

He has a challenge in that article, the challenge is this:

Create this table

CREATE TABLE BadData (FullName varchar(20) NOT NULL);

INSERT INTO BadData (FullName)

SELECT 'Clinton, Bill' UNION ALL

SELECT 'Johnson, Lyndon, B.' UNION ALL

SELECT 'Bush, George, H.W.';

Split the names into 3 columns (not fields ;-) ), the shortest query wins

Your output should be this:

LastName FirstName MiddleInitial

Clinton Bill

Johnson Lyndon B.

Bush George H.W.

Hugo Kornelis posted a solution by using STUFF

SELECT FullName,LEFT(FullName, CHARINDEX(', ', FullName) - 1) AS LastName,

STUFF(LEFT(FullName, CHARINDEX(', ', FullName + ', ',CHARINDEX(', ', FullName) + 2) - 1), 1, CHARINDEX(', ', FullName) + 1, '') AS FirstName,

STUFF(FullName, 1,CHARINDEX(', ', FullName + ', ',

CHARINDEX(', ', FullName) + 2), '') AS MiddleInitial

FROM BadData

which according to editplus is 340 characters

I replied with this

SELECT FullName,PARSENAME(FullName2,NameLen+1) AS LastName,

PARSENAME(FullName2,NameLen) AS FirstName,

COALESCE(REPLACE(PARSENAME(FullName2,NameLen-1),'~','.'),'') AS MiddleInitial

FROM(

SELECT LEN(FullName) -LEN(REPLACE(FullName,',','')) AS NameLen,

REPLACE(REPLACE(FullName,'.','~'),', ','.') AS FullName2,FullName

FROM BadData) x

my solution is 338 characters, so 2 characters less ;-)

Of course I could have 'cheated' a little by doing this

SELECT FullName,PARSENAME(F,NameLen+1) AS LastName,

PARSENAME(F,NameLen) AS FirstName,

COALESCE(REPLACE(PARSENAME(F,NameLen-1),'~','.'),'') AS MiddleInitial

FROM(

SELECT LEN(FullName) -LEN(REPLACE(FullName,',','')) AS NameLen,

REPLACE(REPLACE(FullName,'.','~'),', ','.') AS F,FullName

FROM BadData) x

And this is 306 characters

So let's see, who can do it in less characters

Tuesday, October 10, 2006

An Interview With Ken Henderson About The Forthcoming Book SQL Server 2005 Practical Troubleshooting: The Database Engine

I am a big fan of Ken Henderson’s books, I believe that every SQL Server developer should have a copy of his books. When I noticed on Amazon.com that the book SQL Server 2005 Practical Troubleshooting: The Database Engine which listed Ken Henderson as its editor would be coming out soon I got very excited. I decided to send Ken an email to see if he would be willing to answer some questions I had about the book. To my surprise Ken was more than willing to accommodate my request.

I am a big fan of Ken Henderson’s books, I believe that every SQL Server developer should have a copy of his books. When I noticed on Amazon.com that the book SQL Server 2005 Practical Troubleshooting: The Database Engine which listed Ken Henderson as its editor would be coming out soon I got very excited. I decided to send Ken an email to see if he would be willing to answer some questions I had about the book. To my surprise Ken was more than willing to accommodate my request.The question-and-answer session with Ken that follows was conducted via email.

Denis: What is the audience for this book, is it the enterprise user or can a small department benefit from the tips in this book?

Ken: Both types of users would benefit. Anyone who’s ever had a problem with SQL Server has probably noticed how few resources there are out there for troubleshooting SQL Server issues. There are plenty of resources that talk about how it works. There are many that discuss how to write code for it. But there are scant few that talk about what to do when something goes wrong. This book is intended for that audience. It is also intended for those who want to better understand how the product works and to be prepared in the event that something does go wrong with it. SQL Server doesn’t break often, but, when it does, this book will help you deal with it.

Denis: For a customer who has a performance problem that is not hardware related, what would you say are the most important chapters in this book (in order of importance)?

Ken: The Query Processor Issues chapter and the Procedure Cache Issues chapter are the two best for this type of problem.

Denis: Seven developers from the SQL Server development team and three support professionals from Microsoft Customer Support Services wrote this book. What took so long to write a book like this, and why wasn’t there a SQL Server 2000 version? Is it because SQL Server has truly grown to be a major player in the enterprise market and there is a definitive need for a book like this now?

Ken: The book took so long because all of the authors are first-time authors, and they are very busy people. There was no SQL Server 2000 version because I was too busy with my own projects to begin this project back then. SQL Server is indeed a major player in the enterprise, but I believe it has been since SQL Server 7.0. That particular aspect had nothing to do with the timing of this book.

Denis: I noticed you are listed as editor of this book. Have you written any chapters in this book?

Ken: No, I did not write any of the chapters of the book. I also tried to preserve each author’s writing style. Each chapter includes its own byline, and I have edited them as little as possible. Because some of the authors were more capable than others, I necessarily had to be involved to varying degrees with each chapter. Some chapters needed lots of editing; some very little. I think each individual perspective represented in the book is a valuable one, but I also think they speak in unison on the important points of practical troubleshooting with SQL Server.

Denis: What new technologies in SQL Server 2005 do you think are the most beneficial for performance?

Ken: There are really too many to list. A few that come to mind at the moment: instant file growth, improved wildcard support, more sophisticated cache management, improved scaling on big hardware, the XML data type and richer XML support in general, CLR integration, etc.

Denis: This book as I understand has a lot of internals information from the people who either wrote the product or have supported it that currently is not available anywhere else--is that right?

Ken: Yes, the majority of the authors were actually developers on the product. A few were support engineers who supported the product. All have had full access to the SQL Server source code for many years.

Denis: What will a person who reads this book gain in terms of understanding how to performance tune a server?

Ken: They will better understand both how the server works and also how to recognize and troubleshoot common performance problems.

Denis: Is the book geared towards a beginner/intermediate level user or do you have to be an advanced user to really utilize the information in this book?

Ken: There is something in this book for everyone. I’d like to think that beginners, intermediates, and advanced users alike would benefit from reading it.

Denis: What are the most important things a person can do to master SQL Server?

Ken: Naturally, the best thing a person could do would be to do what the authors of this book did: study the SQL Server source code. Studying the SQL Server source gives you insight into how the product works that is impossible to gain through any other means. But, given that that excludes pretty much everyone outside of Microsoft, here are some general thoughts:

#1, understand how Windows works at a very low level and how SQL Server utilizes the many facilities it offers

#2, understand how the product was designed and how it was intended to be used

#3, explore it not only as a user, but as a developer. Fire up a debugger and see how it works under the hood

#4, build real apps with it, using its various components as they were intended to be used

Denis: What are the most important things a person can do to master Transact-SQL?

Ken: My initial thought is that, again, studying the SQL Server source code is the shortest path to the deepest understanding of the language. That said, here are some general thoughts in no particular order:

#1, understand how SQL Server works. Understand the intricacies of performance tuning on SQL Server. Know how data is stored. Understand memory management and scheduling at a very low level. Understand logging and tempdb semantics. Remember that SQL Server is just an application. It’s not magical and can be misused and abused just like any other app

#2, learn the syntax and semantics of the language inside-out. Get a feel for its strengths and weaknesses, and code to those strengths. Always lean toward writing set-oriented code when you can

#3, study solutions to hard problems that are available in various forms, and apply the techniques you learn to solve your own problems

#4, learn other SQL dialects so that you can become familiar with their unique attributes and understand how T-SQL compares with them. Gain an understanding of where T-SQL fits in the general taxonomy of SQL dialects

#5, learn other languages besides SQL. If your favorite programming language is T-SQL, you probably don’t know many languages. Learn C#, VB, Perl, Ruby, C++, or any others you can work into your research so that you can better understand software engineering as a discipline and so that you can more clearly see T-SQL’s strengths and weaknesses when compared with those other languages. Try to see where you might apply techniques from those other languages to solve problems you encounter in T-SQL. Familiarize yourself with what a design pattern is, what an idiom is, what refactoring is, and apply these concepts in T-SQL just as you would in any other “real” language

#6, understand the various SQL Server components and other technologies so that you can accurately ascertain when it’s appropriate to use T-SQL. It’s not the solution for every problem. As the old saying goes, “When all you have is a hammer, everything starts to look like a nail.” By broadening your knowledge of the tools and problem solutions available to you, you’ll be more likely to choose the best one when deciding how to design a piece of software or solve a particular problem. T-SQL may turn out not to be the best way to go in a given situation

And I will end with 9 questions for Ken not related to this book

Denis: What SQL Server books are on your bookshelf?

Ken: I have Celko’s books, Darren Green’s book, and a few others. Unfortunately, I don’t have time to read as much as I’d like. I spend most of my time either writing code for the product or studying code written by others on the SQL Server development team. The majority of my research into how SQL Server works happens via studying its source code directly.

Denis: Why do you write technical books?

Ken: I write technical books because I enjoy passing on what I’ve learned as a developer. That’s different from enjoying teaching people. I do enjoy teaching people, but that’s not why I write books. Some of the things I’ve learned about SQL Server took me years to master. I enjoy passing that on to people so that they don’t have to travel the same arduous roads that I did. I enjoy helping people. That’s different from teaching for the sake of teaching. I could never train people for a living. I am a programmer by trade, and everything else is an offshoot of that.

If I didn’t think I had something unique to bring to the discussion, I don’t think I’d write books. I don’t ever want to do what has already been done. I want to bring a fresh perspective to technical books, and I want to explore things in ways most other authors wouldn’t. If my work was exactly like everyone else’s, there’d be no reason for it to exist, and I wouldn’t bother. Given that I’ve never written fulltime but have always held down a regular day job while writing my books, the work itself is simply too hard to do just to be a clone of someone else. When people pick up one of my books, I hope they know right away that it’s one of mine, that it speaks with a distinctive voice, and I hope they think they might learn something from it simply because they trust me as an author.

Denis: Why did you join Microsoft?

Ken: I joined Microsoft to get inside SQL Server. I felt that the only way to go beyond the books and whitepapers currently out there on SQL Server was to see the source code for myself, and the only way that was going to happen is if I joined the company. I wanted to approach the exploration of SQL Server from a career developer’s standpoint, something that I had not seen done before. Most SQL Server books were written by professional trainers and former DBAs. As a career developer, I thought I could bring a fresh perspective to the coverage of SQL Server, and I felt the only way to really do that was to “go live with the natives” for a few years.

Denis: Who are your favorite authors?

Ken: Mark Twain, Kurt Vonnegut, Bart D. Erhman, Robert Price, Dean Koontz, Stephen King, Joe Celko, Sam Harris, Richard Carrier, Don Box, David Solomon, Charles Petzold, Kent Beck, Martin Fowler, Bruce Eckel, and many others.

Denis: Who do you consider your rival authors?

Ken: I don’t really think of anyone else out there as a rival. When I write a book, I mainly measure my work against my concept of the perfect book. I write for me. There’s a great book out there titled On Writing Well where the author, William Zinsser, repeats the old truism that quality is its own reward. It really is. I love the fact that people enjoy my books, but, really, the day I finish the final draft of a book and can say that I’m really done with it (at least for the moment :-) ), I’ve accomplished my goal. If it never sold a copy, I’d still feel fulfilled. I do care how it sells against other books, but I don’t really focus on it and don’t get caught up in any type of rivalries with other authors or other books.

Because I always want to write a better book than I wrote last time, I necessarily compete with my previous work and I compete against what I think the ideal technical book is. I think there’s enough room out there for lots of technical authors (it’s not as though people only buy one technical book and no others), and I have special empathy for my comrades out there who have to slog along in the middle of the night to crank their books out.

Denis: Where did the “Guru’s Guide” concept come from?

Ken: Wayne Snyder, one of the MVPs reviewing the manuscript for the first Guru’s Guide (which was at that time unnamed), wrote in the margin, “Hey, Ken, this is really a guru’s guide to solutions to hard T-SQL problems!” at which point the marketing folk at Addison-Wesley saw this and seized upon it. We had kicked around several titles, but hadn’t settled on any of them. As soon as they saw this, they pushed me hard to use it, and I reluctantly agreed. I didn’t like it initially because I thought the title of a technical book should focus on either the subject material or its intended audience, not its author. There was an understanding that we’d revisit the title when we did the second book (I was originally under contract to do three SQL Server books for Addison-Wesley), but then sales of the first book exploded, and there was no way we could change it at that point.

Denis: What do you think of all the accolades the Guru’s Guide books have received?

Ken: I am appreciative of them, but continue to be surprised by the longevity of the books and the reception they’ve garnered. I thought I was writing a niche book when I wrote that first Guru’s Guide book. I just wanted to get down everything I knew about T-SQL before I forgot it ;-). I will continue to write the kinds of books I like to read as long as people will buy them, so I hope that people continue to enjoy my work.

Denis: Will you be updating your Guru’s Guide books for SQL Server 2005? If so, when will they be out?

Ken: Yes. The second editions of the Guru’s Guide books should be out in 2007.

Denis: Describe your most unpleasant experience as an author.

Ken: I had a particularly unpleasant experience during the work on my architecture book when I had to send one of the technical reviewers packing. He was someone who’d provided useful feedback on my work in the past and someone I’d handpicked to review the book for technical issues. I usually appreciate negative feedback during the technical review process and generally consider it the most useful type of feedback, but this reviewer focused more on arguing with me about what should and shouldn’t be in the book than reviewing what was there for technical accuracy. He had a problem with the fact that I spent the first 300 pages of the book (the book ended up being over 1000 pages long) covering fundamental concepts (e.g., Windows internals) that I thought people needed to understand in order to understand the rest of the book.

I had seen people within Microsoft struggle to understand SQL Server internals because they did not have a good grasp of how Windows worked or how XML worked or how COM worked, or whatever, and, assuming readers would likely face the same types of challenges, I set out to remedy that in my book. I also wanted to go deeper than any SQL Server book ever had, and that necessitated being able to assume a certain amount of fundamental knowledge going in. I wrote him back after his first objection to the section and told him that, while I respected his opinion, I had my reasons for including it, and I explained those reasons as best I could.

He suggested I just refer people to authors like Richter and Solomon and those guys, and I told him I’d considered that, but that ultimately I felt that would be cutting corners and would be a huge inconvenience since readers would have to purchase several other books just to understand mine. No single other book had all the technical fundamentals I felt were essential, nor did any of them cover the material the way that I wanted it covered--in a manner that was designed especially for DBAs and database people. At the same time, most readers wouldn’t be able to skip the fundamentals coverage in some form or fashion because they wouldn’t be able to understand my SQL Server internals coverage without it. While it was certainly a huge amount of work for me to include this section (it was much like writing a whole separate book), I felt it was the right thing to do.

He persisted with his objections and continued to voice them not only to me but also to the editing team at Addison-Wesley. I told him on several occasions that I respected his opinion, but that, as the author, the call was mine to make and that I’d made it. This seemed to irritate him, and he continued to consume a certain amount of my time with correspondence related to the subject. At one point, I counted 7 separate threads from him on that one subject in my Inbox, and the folks at Addison-Wesley had begun to complain about him. The fundamentals section, and his negative remarks regarding it, came to dominate all the feedback we got from him. While other reviewers were either indifferent to the coverage of Windows internals in a SQL Server book (it was certainly a novel approach) or embraced it outright, he became increasingly more negative as we went along. We got useful feedback on the entirety of the manuscript from all the other reviewers, but he seemed unable to move on from the fundamentals issue. Eventually, I had my fill of dealing with him and cut him loose from the project. I’m a fairly patient person, but I just didn’t have time to deal with him anymore.

Technical reviewers sometimes get on crusades and attempt to usurp the role of the author to some extent. Until this happened, I’d never personally experienced it, but I’d heard of it. At the end of the day, the decision as to what is and isn’t in a book is the author’s to make, and the role of the technical reviewer is to identify technical issues with whatever it is that will be in the book. Decisions about content belong to the author, and, to a lesser extent, the publisher and the publisher’s editing team. I guess the lesson I learned here was to be more careful with whom I select for involvement with my projects. I always want honest feedback, and, fortunately, I know a lot of people who will happily point out every technical issue they find with my work without trying to become a de facto coauthor.

About the book:

Paperback: 456 pages

Publisher: Addison-Wesley; 1ST edition

Language: English

ISBN: 0321447743

Contents

Preface

Chapter 1 Waiting and Blocking Issues

Chapter 2 Data Corruption and Recovery Issues

Chapter 3 Memory Issues

Chapter 4 Procedure Cache Issues

Chapter 5 Query Processor Issues

Chapter 6 Server Crashes and Other Critical Failures

Chapter 7 Service Broker Issues

Chapter 8 SQLOS and Scheduling Issues

Chapter 9 Tempdb Issues

Chapter 10 Clustering Issues

Index

Thanks to Ken for answering all these questions and if there is one reason this year to buy your own holiday gift then SQL Server 2005 Practical Troubleshooting: The Database Engine is it

Amazon Links:

Monday, October 09, 2006

sys.identity_columns

This is the third(see edit below) catalog view that I will cover, I have already covered sys.dm_exec_sessions and sys.dm_db_index_usage_stats For a list of all the catalog views click here

EDIT: Obviously I need to get more sleep because sys.identity_columns is not one of the Dynamic Management Views

Today we will talk about the sys.identity_columns Object Catalog View

The view sys.identity_columns contains a row for each column that is an identity column

If you look at this view in Books On Line you will notice that there are only 4 columns described

seed_value

increment_value

last_value

is_not_for_replication

However when you run SELECT * FROM sys.identity_columns you get back 26 columns. My first impression was that the documentation was incomplete, however when you look closer you will see that the first thing mentioned is

[columns inherited from sys.columns] For a list of columns that this view inherits, see sys.columns (Transact-SQL).

So this view return 4 columns in addition to what the sys.columns view returns

Make sure that you are using SQL Server 2005 and are in the AdventureWorks database

Okay so let's start, the way I write about these views is that I don't want to just copy what is in BOL. I try to have a couple of queries that will show you how to accomplish some things by using these views

Let's select all columns that have the identity property set by using the sys.columns view

SELECT *

FROM sys.columns

WHERE is_identity =1

Now run the following query

SELECT *

FROM sys.identity_columns

As you can see you get back the same number of rows only you get 4 additional columns back which have some information that only deal with identity columns

EDIT: Someone left me a comment (thank you) and I decided to update the post, the following query

SELECT * FROM sys.columns

WHERE is_identity =1

will return a column names is_computed, but the following query below will not

SELECT *

FROM sys.identity_columns

Which makes sense since an identity column can not be computed

END EDIT

Let's try some other things, let's select only the columns that have a tinyint as an identity column, this is easy to do we just join with the sys.types view

SELECT i.*

FROM sys.identity_columns i JOIN sys.types t ON i.user_type_id = t.user_type_id

WHERE t.name ='tinyint'

Or let's see how many different integer data types have the identity property set

SELECT t.name,COUNT(*) as GroupedCount

FROM sys.identity_columns i JOIN sys.types t ON i.user_type_id = t.user_type_id

GROUP BY t.name

And the results are below

name GroupedCount

----------------------

bigint 3

int 43

smallint 3

tinyint 2

So that is all fine but how do we know what table this column belongs to? That's pretty easy to do we can just use the OBJECT_NAME function

--tinyint only

SELECT OBJECT_NAME(object_id) AS TableName,t.name,i.*

FROM sys.identity_columns i JOIN sys.types t ON i.user_type_id = t.user_type_id

WHERE t.name ='tinyint'

--smallint only

SELECT OBJECT_NAME(object_id) AS TableName,t.name,i.*

FROM sys.identity_columns i JOIN sys.types t ON i.user_type_id = t.user_type_id

WHERE t.name ='smallint'

There is a small problem with using OBJECT_NAME(object_id) to get the table name

We should be using this instead

SCHEMA_NAME(CAST(OBJECTPROPERTYEX(OBJECT_ID, 'SchemaId') AS INT))

+ '.' + OBJECT_NAME(OBJECT_ID) as FullTableName

Why am I doing this? This is because SQL server 2005 introduced schemas

so basically if we do this

SELECT * FROM HumanResources.Department

we have no problem, but if we run the following query

SELECT * FROM Department

we get this friendly message

Server: Msg 208, Level 16, State 1, Line 1

Invalid object name 'Department'.

Now let's create a Department table

CREATE TABLE Department (ID INT IDENTITY NOT NULL)

When we run this query

SELECT SCHEMA_NAME(CAST(OBJECTPROPERTYEX(OBJECT_ID, 'SchemaId') AS INT))

+ '.' + OBJECT_NAME(OBJECT_ID) AS FullTableName,

CASE t.name WHEN 'tinyint' THEN 255 -COALESCE(CONVERT(INT,last_value),0)

WHEN 'smallint' THEN 32767 -COALESCE(CONVERT(INT,last_value),0)

WHEN 'int' THEN 2147483647 -COALESCE(CONVERT(INT,last_value),0)

WHEN 'bigint' THEN 9223372036854775807 -COALESCE(CONVERT(INT,last_value),0)

END AS ValuesLeft ,

OBJECT_NAME(object_id) as TableName,t.name,i.*

FROM sys.identity_columns i JOIN sys.types t ON i.user_type_id = t.user_type_id

WHERE seed_Value > 0

AND OBJECT_NAME(object_id) like 'DE%'

ORDER BY TableName

We will see two rows

dbo.Department 2147483647 Department int

HumanResources.Department 32751 Department smallint

One table is dbo.Department and the other table is HumanResources.Department

Now when we run the same queries again there is no problem

SELECT * FROM Department

SELECT * FROM HumanResources.Department

You can read up more on schemas in BOL, let's get back to sys.identity_columns

let's do something interesting now, let's find out how many more identity values we have left until we run out

SELECT SCHEMA_NAME(CAST(OBJECTPROPERTYEX(OBJECT_ID, 'SchemaId') AS INT))

+ '.' + OBJECT_NAME(OBJECT_ID) AS FullTableName,

CASE t.name WHEN 'tinyint' THEN 255 -COALESCE(CONVERT(INT,last_value),0)

WHEN 'smallint' THEN 32767 -COALESCE(CONVERT(INT,last_value),0)

WHEN 'int' THEN 2147483647 -COALESCE(CONVERT(INT,last_value),0)

WHEN 'bigint' THEN 9223372036854775807 -COALESCE(CONVERT(INT,last_value),0) END AS ValuesLeft ,

OBJECT_NAME(object_id) as TableName,t.name,i.*

FROM sys.identity_columns i JOIN sys.types t ON i.user_type_id = t.user_type_id

WHERE seed_Value > 0

ORDER BY FullTableName

You can also calculate the percentage used

SELECT SCHEMA_NAME(CAST(OBJECTPROPERTYEX(OBJECT_ID, 'SchemaId') AS INT))

+ '.' + OBJECT_NAME(OBJECT_ID) AS FullTableName,

CASE t.name WHEN 'tinyint' THEN 100 -(255 -COALESCE(CONVERT(INT,last_value),0)) /255.0 * 100

WHEN 'smallint' THEN 100 -( 32767 -COALESCE(CONVERT(INT,last_value),0)) /32767.0 * 100

WHEN 'int' THEN 100 -(2147483647 -COALESCE(CONVERT(INT,last_value),0)) /2147483647.0 * 100

WHEN 'bigint' THEN 100 -(9223372036854775807 -COALESCE(CONVERT(INT,last_value),0)) /9223372036854775807.0 * 100

END AS PercentageUsed ,

OBJECT_NAME(object_id) as TableName,t.name,i.*

FROM sys.identity_columns i JOIN sys.types t ON i.user_type_id = t.user_type_id

WHERE seed_Value > 0

ORDER BY PercentageUsed DESC

Now let's find all the columns where the seed value is not 1

SELECT seed_value,SCHEMA_NAME(CAST(OBJECTPROPERTYEX(OBJECT_ID, 'SchemaId') AS INT))

+ '.' + OBJECT_NAME(OBJECT_ID) AS FullTableName,i.*

FROM sys.identity_columns i JOIN sys.types t ON i.user_type_id = t.user_type_id

WHERE seed_value > 1

ORDER BY FullTableName

Now let's find all the columns where the increment value is not 1