Here are some interesting articles I read and tweeted about this past week; I think you will like them as well. If you are bored this weekend, some of these might make good reading.

Transaction Commit latency acceleration using Storage Class Memory in Windows Server 2016/SQL Server 2016 SP1

SQL Server 2016 SP1 adds a significant new performance feature, the ability to accelerate transaction commit times (latency) by up to 2-4X, when employing Storage Class Memory (NVDIMM-N nonvolatile storage). This scenario is also referred to as “persistent log buffer” as explained below.

This enhancement is especially valuable for workloads which require high frequency, low latency update transactions. These app patterns are common in the finance/trading industry as well as online betting and some process control applications.

As we all wind down for the 2016 holiday season, we want to give the SQL Server community a holiday gift to say ‘thank you’ for all your support during 2016, and what better gift than more free content?!

As many of you know, I publish a bi-weekly newsletter to more than 13,500 subscribers that contains an editorial on a SQL Server topic, a demo video, and a book review of my most recently completed book. We’re making all the 2015 demo videos available so everyone can watch them – 25 videos in all, mostly in WMV format. I did the same thing the last few years for the 2014 videos, 2013 videos, 2012 videos, and 2011 videos.

Developers Choice: Query progress – anytime, anywhere

One of the most important actions when a performance issue hits is to get a precise understanding of the workload that’s executing and how resource usage is being driven. The actual execution plan is an invaluable tool for this purpose.

Query completion is a prerequisite for the availability of an actual query plan, but with LQS (Live Query Statistics), you can already get information about in-flight query executions (see this blog post), which is especially useful for long running queries, and queries that run indefinitely and never finish.

To look at in-flight query executions, the query execution statistics profile infrastructure must be enabled on demand.

Get All of the Freebies for “End-to-End Troubleshooting Checklist for Microsoft SQL Server”

One of my most enduring and popular presentations is called “End-to-End Troubleshooting Checklist for Microsoft SQL Server”. In this presentation, I take you through my six-step checklist from detection of a performance issue on SQL Server through identification of the root cause to remediation and finally post-mortem steps to ensure the problem is prevented (or at least detected immediately) in the future.

Over the years, I’ve had many inquiries about the slides, T-SQL scripts, and additional troubleshooting information. I’m happy to report that I’ve finally collated all of the associated content from that presentation!

Selective XML Indexes: Not Bad At All

So what is a Selective XML index? It’s an index! For XML! Where you pick the parts of the XML to index. Other XML indexes are rather all or nothing, and can end up being pretty huge, depending on the size of your documents. I think they’re at least the size of the data, if I recall correctly. Let’s take a look at some examples.

sp_WhatsupQueryStore

The sp_WhatsupQueryStore Stored Procedure is a Microsoft SQL Server Stored Procedure that retrieves all kinds of information from the Query Store. By running the script on this website the sp_WhatsupQueryStore Stored Procedure gets installed in the "master" database of your SQL Server Instance.

After installation you can run the Stored Procedure as follows:

EXEC sp_WhatsupQueryStore @dbname, @timewindow, @topqueries, @return_store_config, @return_forced_plans, @return_top_duration, @return_top_cpu, @return_top_log_read, @return_top_log_write, @return_top_phys_read

Clustered Columnstore Index in Azure SQL Database

Columnstore index is the preferred technology to run analytics queries in Azure SQL Databases. We recently announced general availability of In-Memory technologies for all Premium databases. Similar to In-Memory OLTP, the columnstore index technology is available in Premium databases.

The columnstore technology is available in two flavors: clustered columnstore index (CCI) for DataMart analytics workloads and nonclustered columnstore index (NCCI) to run analytics queries on operational (i.e. OLTP) workloads. Please refer to NCCI vs CCI for the differences between these two flavors of columnstore indexes. The columnstore index can speed up the performance of analytics queries up to 100x while significantly reducing the storage footprint. The data compression achieved depends on the schema and the data, but we see around 10x data compression on average when compared to rowstore with no compression. This blog post focuses on analytics workloads using CCI; NCCI will be covered in a future post.
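To put the "around 10x on average" figure in concrete terms, here is a rough back-of-the-envelope sketch in Python. It is only illustrative arithmetic: the function name and the sizes are hypothetical, and the ratio you actually get depends on the schema and the data, as the excerpt notes.

```python
def estimated_columnstore_size_gb(rowstore_size_gb: float,
                                  compression_ratio: float = 10.0) -> float:
    """Estimate the compressed size of a table stored as a columnstore.

    rowstore_size_gb  -- size of the uncompressed rowstore table (hypothetical input)
    compression_ratio -- assumed ratio; 10.0 mirrors the average quoted above
    """
    if compression_ratio <= 0:
        raise ValueError("compression_ratio must be positive")
    return rowstore_size_gb / compression_ratio


# A hypothetical 500 GB uncompressed rowstore table at the quoted average ratio.
print(estimated_columnstore_size_gb(500))
```

Treat the result as a planning estimate only; measuring a representative sample of your own data is the reliable way to get the real ratio.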

When Did SQL Server Last Update That Statistic? How Much Has Been Modified Since? And What Columns are in the Stat?

Whether I’m working as a DBA, a consultant, a teacher, or just answering questions in my inbox, I always end up needing a script to inspect statistics one way or another.

Here are some freshly written scripts for a classic DBA question: what’s going on in my stats?

SQL Server + Java: What’s new

Java continues to be one of the most widely used programming languages for a variety of application scenarios and industries. The Microsoft JDBC Driver for SQL Server is used to connect Java applications to SQL Server, whether SQL Server is hosted in the cloud or on-premises, or provided as a platform-as-a-service.

With the release of

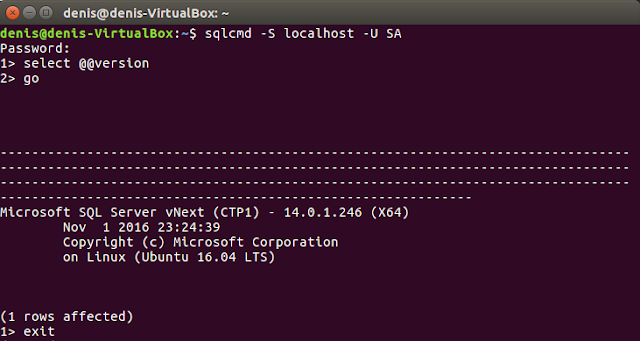

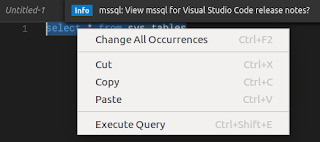

SQL Server v.Next public preview on Linux and Windows, the ability to connect to SQL Server on Linux, Windows, Docker or macOS (via Docker) makes cross-platform support for all connectors, including the JDBC driver, even more important. To enable Java developers to use the newest SQL Server features, we have been updating the JDBC driver with client-side support for new features, including Always Encrypted and Azure Active Directory Authentication.

Microsoft R Server 9.0 now available

Microsoft R Server 9.0, Microsoft's R distribution with added big-data, in-database, and integration capabilities, was released today and is now available for download to MSDN subscribers. This latest release is built on Microsoft R Open 3.3.2, and adds new machine-learning capabilities, new ways to integrate R into applications, and additional big-data support for Spark 2.0.

This release includes a brand new R package for machine learning: MicrosoftML. This package provides state-of-the-art, fast and scalable machine learning algorithms for common data science tasks including featurization, classification and regression. Some of the functions provided include:

- Fast linear and logistic model functions based on the Stochastic Dual Coordinate Ascent method;

- Fast Forests, a random forest and quantile regression forest implementation based on FastRank, an efficient implementation of the MART gradient boosting algorithm;

- A neural network algorithm with support for custom, multilayer network topologies and GPU acceleration;

- One-class anomaly detection based on support vector machines.

You can learn more about MicrosoftML at this live webinar on Wednesday, December 14.

Released: Public Preview for Microsoft Azure SQL Database Management Pack (6.7.11.0)

We are working on significantly updating the Management Pack for Azure SQL Database.

This release will bring support for Elastic Pools and Azure AD Authentication among other new features. We are also working on monitoring a larger number of databases, and we expect to improve the scale by the time we RTM. Here are some numbers to give you an idea for this public preview:

Improve query performance on memory optimized tables with Temporal using new index creation enhancement in SP1

With the introduction of the Temporal feature in SQL 2016 and Azure SQL Database, there is an ability to time travel through the state of data as it was at any given point in time. Alongside In-Memory OLTP, Temporal on memory optimized tables allows you to harness the speed of In-Memory OLTP, and gives you the same ability to track history and audit every change made to a record. Temporal added to memory optimized tables also allows you to maintain “smaller” memory optimized tables, and thereby a smaller memory footprint, by deleting data that isn’t “hot” anymore from the current memory optimized table, which in turn moves it to the history table without needing an external archival process.

When memory optimized and temporal tables are combined, an internal memory optimized table is created in addition to the history table, as depicted in the diagram below. Data is flushed asynchronously from the internal in-memory history table to the disk based history table. The flush interval isn’t currently configurable. Data is flushed when the internal table reaches 8% of the memory consumed by the current table, OR you can flush it manually by executing the procedure sys.sp_xtp_flush_temporal_history. The internal memory optimized table is created with the same column definitions as the current in-memory table, but with a single index.
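The 8% flush rule can be illustrated with a small sketch. This is only a toy model in Python, not how SQL Server implements the check: the function name `should_flush_history` and its byte-count parameters are hypothetical, and it assumes that "reaches 8%" means greater than or equal to.

```python
# Toy model of the flush trigger described above; not SQL Server's actual code.
FLUSH_THRESHOLD_PERCENT = 8  # taken from the excerpt: 8% of the current table's memory


def should_flush_history(internal_bytes: int, current_bytes: int) -> bool:
    """Return True when the internal history table has reached the threshold.

    internal_bytes -- memory used by the internal in-memory history table (hypothetical)
    current_bytes  -- memory used by the current memory optimized table (hypothetical)
    """
    if internal_bytes < 0 or current_bytes < 0:
        raise ValueError("sizes must be non-negative")
    if current_bytes == 0:
        # Assumption: with an empty current table, any buffered history is flushed.
        return internal_bytes > 0
    # Integer arithmetic avoids floating-point rounding at the exact boundary.
    return internal_bytes * 100 >= FLUSH_THRESHOLD_PERCENT * current_bytes
```

Under this model, a current table that consumes 10 GB would trigger a flush once the internal table grows to about 0.8 GB.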

Extended per-operator level performance stats for Query Processing

As requested by the community to complete the per-operator information, starting with SQL Server 2016 SP1 we are now exposing memory grant per grant iterator (such as Sorts and Hash Matches). These give you added insight into memory grants, and how overall memory usage is driven throughout execution.

In the October release of SSMS we exposed per-operator stats directly in the Properties window for each operator, but the memory grant properties are not there yet. These will be added to the Properties window in an upcoming release of SSMS.